An international team of researchers is calling for urgent improvements in deepfake detection technologies after uncovering critical flaws in widely used detection tools.

A study by CSIRO, Australia’s national science agency, and South Korea’s Sungkyunkwan University (SKKU) has assessed 16 leading detectors and found none could reliably identify real-world deepfakes. The study is published on the arXiv preprint server.

Deepfakes are artificial intelligence (AI)-generated synthetic media that can manipulate images, videos, or audio to create hyper-realistic but false content, raising concerns about misinformation, fraud, and privacy violations.

CSIRO cybersecurity expert Dr. Sharif Abuadbba said the availability of generative AI has fueled the rapid rise in deepfakes, which are cheaper and easier to create than ever before.

“Deepfakes are increasingly deceptive and capable of spreading misinformation, so there is an urgent need for more adaptable and resilient solutions to detect them,” Dr. Abuadbba said.

“As deepfakes grow more convincing, detection must focus on meaning and context rather than appearance alone. By breaking down detection methods into their fundamental components and subjecting them to rigorous testing with real-world deepfakes, we’re enabling the development of tools better equipped to counter a range of scenarios.”

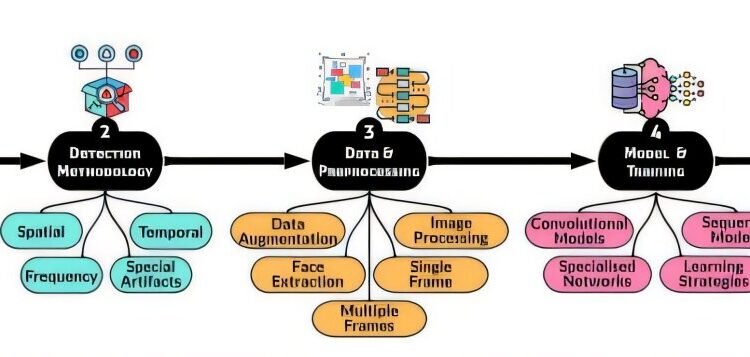

The researchers developed a five-step framework that evaluates detection tools based on deepfake type, detection method, data preparation, model training, and validation. It identifies 18 factors affecting accuracy, ranging from how data is processed to how models are trained and tested.
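One way to picture the framework is as a record that describes each detector along the same five steps. Comparing two records then shows exactly which steps differ. The sketch below makes that idea concrete. The class name, the helper function, and all field values are hypothetical labels for illustration. They are not the study's own taxonomy, and they are not its 18 factors.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class DetectorProfile:
    """A detector described along the five steps named in the article.

    Values are free-form, hypothetical labels (not the study's categories)."""
    deepfake_type: str
    detection_method: str
    data_preparation: str
    model_training: str
    validation: str


def differing_steps(a: DetectorProfile, b: DetectorProfile) -> List[str]:
    """Return the names of the framework steps on which two detectors differ."""
    return [f.name for f in fields(DetectorProfile)
            if getattr(a, f.name) != getattr(b, f.name)]


if __name__ == "__main__":
    # Two hypothetical detectors that differ only in their training data.
    a = DetectorProfile("face swap", "transformer", "face crop",
                        "celebrity faces", "cross-dataset test")
    b = DetectorProfile("face swap", "transformer", "face crop",
                        "mixed faces", "cross-dataset test")
    print(differing_steps(a, b))  # ['model_training']
```

If two detectors differ on only one step, that step can be examined in isolation, in the spirit of breaking detection methods down into their components.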

SKKU Professor Simon S. Woo said the collaboration between CSIRO and SKKU has advanced the field’s understanding of detection model vulnerabilities.

“This study has deepened our understanding of how deepfake detectors perform in real-world conditions, exposing major vulnerabilities and paving the way for more resilient solutions,” he said.

The study also found that many current detectors struggle when faced with deepfakes that fall outside their training data.

For example, the ICT (Identity Consistent Transformer) detector, which was trained on celebrity faces, was significantly less effective at detecting deepfakes featuring non-celebrities.
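A performance drop like this is usually measured by scoring the same detector twice: once on a test set that resembles its training data, and once on data from a domain it has not seen. The sketch below assumes the detector outputs a "fake" probability for each sample. It computes ROC AUC on each set and reports the difference between them. The function names and the toy scores are hypothetical and do not come from the study.

```python
from typing import Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random fake (label 1) gets a higher
    score than a random real sample (label 0); ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one real and one fake sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def generalization_gap(in_dist: Tuple[Sequence[int], Sequence[float]],
                       out_dist: Tuple[Sequence[int], Sequence[float]]) -> float:
    """AUC on training-like data minus AUC on an unseen domain."""
    return roc_auc(*in_dist) - roc_auc(*out_dist)


if __name__ == "__main__":
    # Toy (labels, scores) pairs, purely illustrative.
    familiar = ([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
    unseen = ([0, 0, 1, 1], [0.4, 0.6, 0.5, 0.3])
    print(roc_auc(*familiar))                     # 1.0
    print(roc_auc(*unseen))                       # 0.25
    print(generalization_gap(familiar, unseen))   # 0.75
```

A large positive gap signals that the detector has learned cues specific to its training data rather than cues that transfer to new faces or sources.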

CSIRO cybersecurity expert Dr. Kristen Moore explained that using multiple detectors and diverse data sources strengthens deepfake detection.

“We’re developing detection models that integrate audio, text, images, and metadata for more reliable results,” Dr. Moore said. “Proactive strategies, such as fingerprinting techniques that track deepfake origins, enhance detection and mitigation efforts.

“To keep pace with evolving deepfakes, detection models should also look to incorporate diverse datasets, synthetic data, and contextual analysis, moving beyond just images or audio.”
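The article does not say how scores from several detectors or modalities would be combined. One simple and common option is late fusion. Each detector outputs a probability that the input is fake, and the final decision is based on a weighted average of those probabilities. The sketch below assumes that setup. The detector names, weights, and the 0.5 threshold are illustrative only and are not CSIRO's method.

```python
from typing import Mapping, Optional


def fuse_scores(scores: Mapping[str, float],
                weights: Optional[Mapping[str, float]] = None) -> float:
    """Weighted mean of per-detector 'fake' probabilities in [0, 1].

    Detectors that are missing from `weights` get a weight of 1.0."""
    if not scores:
        raise ValueError("no detector scores provided")
    weights = weights or {}
    acc = 0.0
    total_w = 0.0
    for name, s in scores.items():
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score for {name!r} out of range: {s}")
        w = weights.get(name, 1.0)
        acc += w * s
        total_w += w
    if total_w <= 0:
        raise ValueError("weights must sum to a positive value")
    return acc / total_w


def is_fake(scores: Mapping[str, float],
            weights: Optional[Mapping[str, float]] = None,
            threshold: float = 0.5) -> bool:
    """Flag the input as fake when the fused score reaches the threshold."""
    return fuse_scores(scores, weights) >= threshold


if __name__ == "__main__":
    # Hypothetical per-modality detector outputs for one video.
    scores = {"image": 0.75, "audio": 0.25, "metadata": 0.5}
    print(fuse_scores(scores))                  # 0.5
    print(fuse_scores(scores, {"image": 3.0}))  # 0.6
    print(is_fake(scores))                      # True
```

Averaging means a single detector that is fooled by an unfamiliar deepfake cannot decide the outcome on its own. The weights could then be tuned on held-out data that covers diverse sources.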

More information:

Binh M. Le et al., SoK: Systematization and Benchmarking of Deepfake Detectors in a Unified Framework, arXiv (2024). DOI: 10.48550/arxiv.2401.04364


Citation:

Research reveals ‘major vulnerabilities’ in deepfake detectors (2025, March 12), retrieved 12 March 2025 from https://techxplore.com/news/2025-03-reveals-major-vulnerabilities-deepfake-detectors.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.