Large Language Models (LLMs) have rapidly become an integral part of our digital landscape, powering everything from chatbots to code generators. However, as these AI systems increasingly rely on proprietary, cloud-hosted models, concerns over user privacy and data security have escalated. How can we harness the power of AI without exposing sensitive data?

A recent study, “Entropy-Guided Attention for Private LLMs,” by Nandan Kumar Jha, a Ph.D. candidate at the NYU Center for Cybersecurity (CCS), and Brandon Reagen, Assistant Professor in the Department of Electrical and Computer Engineering and a member of CCS, introduces a novel approach to making AI more secure.

The paper was presented at the AAAI Workshop on Privacy-Preserving Artificial Intelligence (PPAI 25) in early March and is available on the arXiv preprint server.

The researchers delve into a fundamental, yet often overlooked, property of neural networks: entropy—the measure of information uncertainty within a system. Their work proposes that by understanding entropy’s role in AI architectures, we can improve the privacy, efficiency, and reliability of LLMs.

The privacy paradox in AI

When we interact with AI models—whether asking a virtual assistant for medical advice or using AI-powered legal research tools—our input data is typically processed in the cloud. This means user queries, even if encrypted in transit, are ultimately decrypted for processing by the model. This presents a fundamental privacy risk: sensitive data could be exposed, either unintentionally through leaks or maliciously via cyberattacks.

The field of Private Inference (PI) aims to solve this problem by allowing AI models to operate directly on encrypted data, so that the model provider never sees the raw input. However, PI comes with significant computational costs. Encryption methods that protect privacy also make computation more complex, leading to higher latency and energy consumption, two major roadblocks to practical deployment.

To design efficient private LLMs, researchers must rethink the architecture these models are built on. Nonlinear operations are particularly expensive to evaluate on encrypted data, which makes them an obvious target for removal. However, simply removing nonlinearities destabilizes training and disrupts the core functionality of components like the attention mechanism.

“Nonlinearities are the lifeblood of neural networks,” says Jha. “They enable models to learn rich representations and capture complex patterns.”
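To see why linear operations are comparatively cheap under privacy-preserving computation, consider additive secret sharing, one common building block in this field. The sketch below is only an illustration and is not taken from the paper: the modulus, the function names (`share`, `reconstruct`, `dot_on_shares`), and the choice to treat the weights as public integers are all assumptions made for brevity.

```python
import secrets

# Illustrative modulus for share arithmetic (an assumption of this sketch).
MODULUS = 2**61 - 1


def share(value):
    """Split an integer into two random-looking shares that sum to it mod MODULUS."""
    mask = secrets.randbelow(MODULUS)
    return mask, (value - mask) % MODULUS


def reconstruct(share_a, share_b):
    """Recombine two shares into the original value."""
    return (share_a + share_b) % MODULUS


def dot_on_shares(weights, input_shares):
    """Weighted sum computed by one party on its own shares, with no communication."""
    return sum(w * x for w, x in zip(weights, input_shares)) % MODULUS


inputs = [3, 5, 7]    # the user's private values
weights = [2, 4, 6]   # integer weights, treated as public here for simplicity

pairs = [share(v) for v in inputs]
party_a = [a for a, _ in pairs]
party_b = [b for _, b in pairs]

# Each party works only on its own shares and never sees the raw inputs.
out_a = dot_on_shares(weights, party_a)
out_b = dot_on_shares(weights, party_b)

result = reconstruct(out_a, out_b)                    # private computation
plain = sum(w * x for w, x in zip(weights, inputs))   # ordinary computation
```

The recombined `result` matches `plain` because a weighted sum distributes over the shares. A nonlinear function such as ReLU or softmax does not distribute this way, so protocols need extra cryptographic work to evaluate it, which is where much of the latency comes from.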

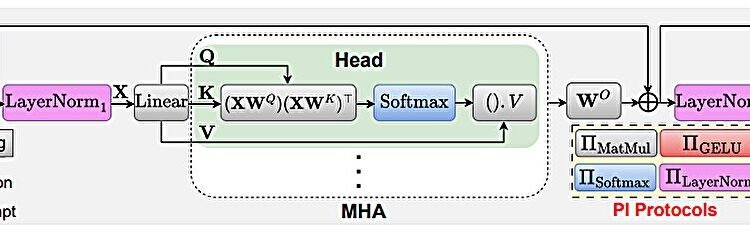

To tackle these challenges, Jha and Reagen’s research focuses on the nonlinear transformations within AI models. In deep learning, nonlinear functions such as activation functions play a crucial role in shaping how models process information. The researchers explore how these nonlinearities affect entropy, specifically the diversity of information passed through different layers of a transformer model.

“Our work directly tackles this challenge and takes a fundamentally different approach to privacy,” says Jha. “It removes nonlinear operations while preserving as much of the model’s functionality as possible.”

Using Shannon’s entropy as a quantitative measure, they reveal two key failure modes that occur when nonlinearity is removed:

- Entropy collapse (Deep Layers): In the absence of nonlinearity, later layers in the network fail to retain useful information, leading to unstable training.

- Entropic overload (Early Layers): Without proper entropy control, earlier layers fail to efficiently utilize the Multi-Head Attention (MHA) mechanism, reducing the model’s ability to capture diverse representations.
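The measurement behind these two failure modes can be sketched in a few lines. The example below is a minimal illustration, not the authors' code: it computes the Shannon entropy (in nats) of each row of an attention matrix and averages it per head. The function names and the toy attention rows are assumptions chosen for the example.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return sum(p * math.log(1.0 / p) for p in probs if p > 0.0)


def head_entropy(attention_rows):
    """Mean entropy over the query rows of a single attention head."""
    return sum(shannon_entropy(row) for row in attention_rows) / len(attention_rows)


context_len = 4
max_entropy = math.log(context_len)  # upper bound, reached by uniform attention

# A head that puts all weight on one token per query (near the collapse extreme).
one_hot_head = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
]

# A head that spreads weight evenly over every token (the diffuse extreme).
uniform_head = [
    [0.25, 0.25, 0.25, 0.25],
    [0.25, 0.25, 0.25, 0.25],
]

collapsed = head_entropy(one_hot_head)
diffuse = head_entropy(uniform_head)
```

Here `collapsed` sits at the lower bound of zero and `diffuse` sits at the upper bound `max_entropy`. Tracking where each head falls between those bounds, layer by layer, is one way to watch for the behavior the researchers describe.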

This insight is new—it suggests that entropy isn’t just a mathematical abstraction but a key design principle that determines whether a model can function properly.

A new AI blueprint

Armed with these findings, the researchers propose an entropy-guided attention mechanism that dynamically regulates information flow in transformer models. Their approach has two components: Entropy Regularization, a new technique that prevents early layers from being overwhelmed by excessive information, and PI-Friendly Normalization, a set of alternatives to standard layer normalization that help stabilize training while preserving privacy.
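As a rough illustration of what an entropy penalty on attention might look like, the sketch below adds a cost for heads whose average entropy exceeds a chosen fraction of the maximum. This is a simplified stand-in rather than the formulation in the paper: the `max_fraction` and `weight` parameters, the squared-excess form, and all function names are assumptions.

```python
import math


def row_entropy(probs):
    """Shannon entropy in nats of one attention row."""
    return sum(p * math.log(1.0 / p) for p in probs if p > 0.0)


def entropy_penalty(heads, max_fraction=0.8, weight=1.0):
    """Penalize heads whose mean entropy exceeds max_fraction * log(context length).

    heads: list of heads, each a list of attention rows (probability lists).
    Returns a non-negative scalar intended to be added to the training loss.
    """
    total = 0.0
    for rows in heads:
        ceiling = max_fraction * math.log(len(rows[0]))
        mean_entropy = sum(row_entropy(r) for r in rows) / len(rows)
        excess = max(0.0, mean_entropy - ceiling)  # only overly diffuse heads pay
        total += excess ** 2
    return weight * total / len(heads)


diffuse_head = [[0.25, 0.25, 0.25, 0.25], [0.25, 0.25, 0.25, 0.25]]
focused_head = [[0.97, 0.01, 0.01, 0.01], [0.01, 0.97, 0.01, 0.01]]

penalty = entropy_penalty([diffuse_head, focused_head])
focused_only = entropy_penalty([focused_head])
```

In a training loop, a term like `penalty` would be added to the task loss so that gradient updates nudge overly diffuse heads toward more selective attention. In this toy case the focused head stays under the ceiling and contributes nothing.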

By strategically regulating the entropy of attention distributions, they were able to maintain coherent, trainable behavior even in drastically simplified models. This keeps attention weights meaningful and avoids the degenerate patterns that commonly arise once nonlinearity is removed, in which a disproportionate number of heads exhibit extreme behavior: collapsing to near one-hot attention (low entropy) or diffusing attention uniformly (high entropy). Both extremes impair the model’s ability to focus and generalize.

This work bridges the gap between information theory and neural architecture design, establishing entropy dynamics as a principled guide for developing efficient privacy-preserving LLMs. It represents a crucial step toward making privacy-preserving AI practical in real-world applications, and it offers a roadmap for developing AI models that are not only more private but also computationally efficient.

The team has also open-sourced their implementation, inviting researchers and developers to experiment with their entropy-guided approach.

More information: Nandan Kumar Jha et al, Entropy-Guided Attention for Private LLMs, arXiv (2025). DOI: 10.48550/arxiv.2501.03489

Journal information: arXiv

Provided by NYU Tandon School of Engineering

Citation: Cracking the code of private AI: The role of entropy in secure language models (2025, March 26), retrieved 26 March 2025 from https://techxplore.com/news/2025-03-code-private-ai-role-entropy.html
