Robots that can closely imitate the actions and movements of humans in real time could be incredibly useful, as they could learn to complete everyday tasks in specific ways without having to be extensively pre-programmed on these tasks. While techniques to enable imitation learning have considerably improved over the past few years, their performance is often hampered by the lack of correspondence between a robot’s body and that of its human user.

Researchers at U2IS, ENSTA Paris recently introduced a new deep learning-based model that could improve the motion imitation capabilities of humanoid robotic systems. This model, presented in a paper pre-published on arXiv, tackles motion imitation as three distinct steps, designed to reduce the human-robot correspondence issues reported in the past.

“This early-stage research work aims to improve online human-robot imitation by translating sequences of joint positions from the domain of human motions to a domain of motions achievable by a given robot, thus constrained by its embodiment,” Louis Annabi, Ziqi Ma, and Sao Mai Nguyen wrote in their paper. “Leveraging the generalization capabilities of deep learning methods, we address this problem by proposing an encoder-decoder neural network model performing domain-to-domain translation.”

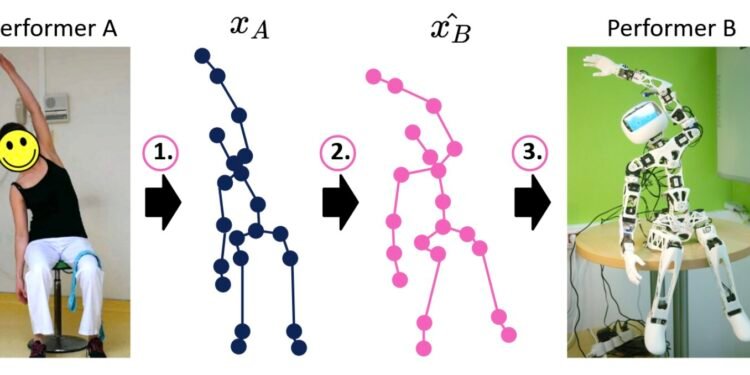

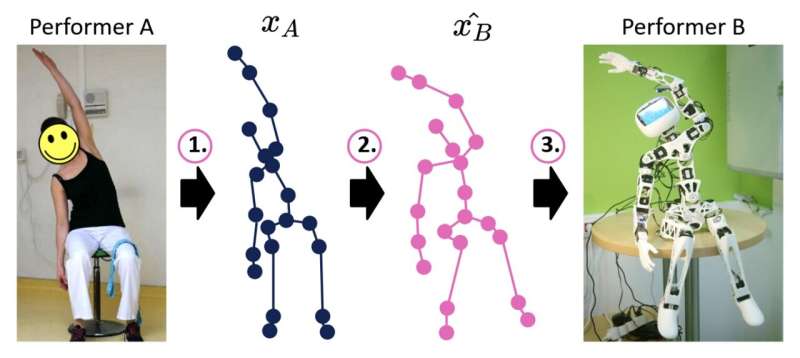

The model developed by Annabi, Ma, and Nguyen separates the human-robot imitation process into three key steps, namely pose estimation, motion retargeting and robot control. Firstly, it utilizes pose estimation algorithms to predict sequences of skeleton-joint positions that underpin the motions demonstrated by human agents.

Subsequently, the model translates this predicted sequence of skeleton-joint positions into similar joint positions that can realistically be produced by the robot’s body. Finally, these translated sequences are used to plan the motions of the robot, theoretically resulting in dynamic movements that could help the robot perform the task at hand.
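The three stages described above can be pictured as a simple per-frame loop. The Python sketch below is only an illustration of that structure, not the researchers' code: the names `imitate`, `clamp_to_limits`, `estimate_pose`, `retarget` and `send_command` are all hypothetical, and the retargeting step is a deliberately naive stand-in (clipping each joint value to the robot's range) for the encoder-decoder network proposed in the paper.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Pose = List[float]                       # one value per joint, for a single frame
Limits = Sequence[Tuple[float, float]]   # (lower, upper) bound for each robot joint


def clamp_to_limits(pose: Sequence[float], limits: Limits) -> Pose:
    """Naive placeholder for retargeting: clip each value to the robot's range.

    This is NOT the learned translation model from the paper; it only marks
    where such a model would sit in the pipeline.
    """
    if len(pose) != len(limits):
        raise ValueError("pose and limits must have the same number of joints")
    return [min(max(value, low), high) for value, (low, high) in zip(pose, limits)]


def imitate(
    frames: Iterable[object],
    estimate_pose: Callable[[object], Pose],
    retarget: Callable[[Pose], Pose],
    send_command: Callable[[Pose], None],
) -> List[Pose]:
    """Run the three stages on each incoming frame, in order."""
    executed: List[Pose] = []
    for frame in frames:
        human_pose = estimate_pose(frame)   # 1. pose estimation
        robot_pose = retarget(human_pose)   # 2. motion retargeting
        send_command(robot_pose)            # 3. robot control
        executed.append(robot_pose)
    return executed


if __name__ == "__main__":
    # Hypothetical two-joint robot; the "frames" here are already joint values.
    limits = [(-1.0, 1.0), (-1.5, 1.5)]
    sent: List[Pose] = []
    imitate(
        frames=[[0.2, 2.0], [-3.0, 0.0]],
        estimate_pose=lambda frame: list(frame),
        retarget=lambda pose: clamp_to_limits(pose, limits),
        send_command=sent.append,
    )
    print(sent)
```

Passing the three stages in as functions keeps them independent, so a learned retargeting model could replace the clipping step without touching pose estimation or control.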

“To train such a model, one could use pairs of associated robot and human motions, [yet] such paired data is extremely rare in practice, and tedious to collect,” the researchers wrote in their paper. “Therefore, we turn towards deep learning methods for unpaired domain-to-domain translation, that we adapt in order to perform human-robot imitation.”

Annabi, Ma, and Nguyen evaluated their model’s performance in a series of preliminary tests, comparing it to a simpler method for reproducing joint orientations that is not based on deep learning. Their model did not achieve the results they were hoping for, suggesting that current deep learning methods might not be able to successfully retarget motions in real time.
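The article does not describe the baseline in detail. As a rough idea of what a geometric, non-learned approach to joint orientations can involve, the sketch below computes the angle at a joint from three estimated 3D keypoints (for example shoulder, elbow and wrist). The function name `joint_angle` and the choice of keypoints are assumptions made for illustration, not taken from the paper.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Return the angle at point b, in radians, between segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(y * y for y in bc))
    if norm == 0.0:
        raise ValueError("keypoints must not coincide with the joint position")
    # Guard against rounding errors pushing the cosine slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.acos(cosine)


if __name__ == "__main__":
    shoulder, elbow, wrist = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)
    print(math.degrees(joint_angle(shoulder, elbow, wrist)))
```

An angle obtained this way could then be sent to the matching robot joint, provided it falls within that joint's range.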

The researchers now plan to conduct further experiments to identify potential issues with their approach, so that they can tackle them and adapt the model to improve its performance. The team’s findings so far suggest that while unsupervised deep learning techniques can be used to enable imitation learning in robots, their performance is still not good enough for them to be deployed on real robots.

“Future work will extend the current study in three directions: Further investigating the failure of the current method, as explained in the last section, creating a dataset of paired motion data from human-human imitation or robot-human imitation, and improving the model architecture in order to obtain more accurate retargeting predictions,” the researchers conclude in their paper.

More information:

Louis Annabi et al, Unsupervised Motion Retargeting for Human-Robot Imitation, arXiv (2024). DOI: 10.48550/arxiv.2402.05115

© 2024 Science X Network

Citation: Testing an unsupervised deep learning model for robot imitation of human motions (2024, March 10), retrieved 10 March 2024 from https://techxplore.com/news/2024-03-unsupervised-deep-robot-imitation-human.html
