Over the past few decades, robots have gradually started making their way into various real-world settings, including some malls, airports and hospitals, as well as a few offices and households.

For robots to be deployed on a larger scale, serving as reliable everyday assistants, they should be able to complete a wide range of common manual tasks and chores, such as cleaning, washing the dishes, cooking and doing the laundry.

Training machine learning algorithms that allow robots to successfully complete these tasks can be challenging, as it often requires extensive annotated data and/or demonstration videos showing humans performing the tasks. Devising more effective methods to collect data to train robotics algorithms could thus be highly advantageous, as it could help to further broaden the capabilities of robots.

Researchers at New York University and UC Berkeley recently introduced EgoZero, a new system to collect ego-centric demonstrations of humans completing specific manual tasks. This system, introduced in a paper posted to the arXiv preprint server, relies on the use of Project Aria glasses, the smart glasses for augmented reality (AR) developed by Meta.

“We believe that general-purpose robotics is bottlenecked by a lack of internet-scale data, and that the best way to address this problem would be to collect and learn from first-person human data,” Lerrel Pinto, senior author of the paper, told Tech Xplore.

“The primary objectives of this project were to develop a way to collect accurate action-labeled data for robot training, optimize for the ergonomics of the data collection wearables needed, and transfer human behaviors into robot policies with zero robot data.”

EgoZero, the new system developed by Pinto and his colleagues, uses Project Aria smart glasses to record video demonstrations of humans completing tasks with robot-executable actions, captured from the point of view of the person wearing the glasses.

These demonstrations can then be used to train robotics algorithms on new manipulation policies, which could in turn allow robots to successfully complete various manual tasks.

“Unlike prior works that require multiple calibrated cameras, wrist wearables, or motion capture gloves, EgoZero is unique in that it is able to extract these 3D representations with only smart glasses (Project Aria smart glasses),” explained Ademi Adeniji, student and co-lead author of the paper.

“As a result, robots can learn a new task from as little as 20 minutes of human demonstrations, with no teleoperation.”

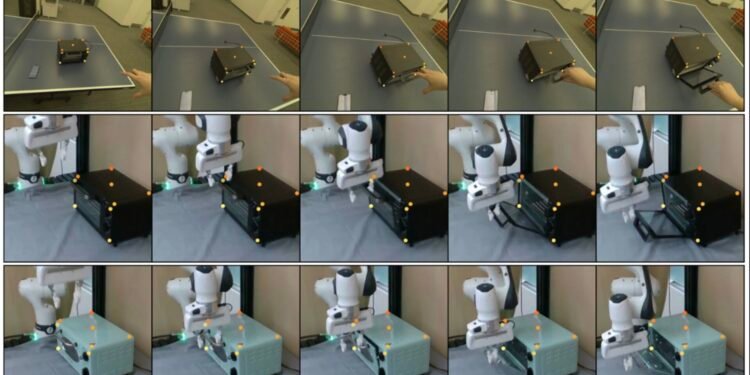

To evaluate their proposed system, the researchers used it to collect video demonstrations of simple actions that are commonly completed in a household environment (e.g., opening an oven door) and then used these demonstrations to train a machine learning algorithm.

The machine learning algorithm was then deployed on a Franka Panda, a robotic arm with a gripper attached at its end. Notably, they found that the robotic arm successfully completed most of the tasks they tested it on, even though the algorithm planning its movements underwent minimal training.

“EgoZero’s biggest contribution is that it can transfer human behaviors into robot policies with zero robot data, with just a pair of smart glasses,” said Pinto.

“It extends past work (Point Policy) by showing that 3D representations enable efficient robot learning from humans, but completely in-the-wild. We hope this serves as a foundation for future exploration of representations and algorithms to enable human-to-robot learning at scale.”

The code for the data collection system introduced by Pinto and his colleagues was published on GitHub and can be easily accessed by other research teams.

In the future, it could be used to rapidly collect datasets to train robotics algorithms, which could contribute to the further development of robots, ultimately facilitating their deployment in a greater number of households and offices worldwide.

“We now hope to explore the tradeoffs between 2D and 3D representations at a larger scale,” added Vincent Liu, student and co-lead author of the paper.

“EgoZero and past work (Point Policy, P3PO) have only explored single-task 3D policies, so it would be interesting to extend this framework of learning from 3D points in the form of a fine-tuned LLM/VLM, similar to how modern VLA models are trained.”

Written by Ingrid Fadelli, edited by Lisa Lock, and fact-checked and reviewed by Robert Egan.

More information: Vincent Liu et al, EgoZero: Robot Learning from Smart Glasses, arXiv (2025). DOI: 10.48550/arxiv.2505.20290


© 2025 Science X Network

Citation: Training robots without robots: Smart glasses capture first-person task demos (2025, June 12), retrieved 12 June 2025 from https://techxplore.com/news/2025-06-robots-smart-glasses-capture-person.html
