It reads. It talks. It collates mountains of data and recommends business decisions. Today’s artificial intelligence might seem more human than ever. However, AI still has several critical shortcomings.

“As impressive as ChatGPT and all these current AI technologies are, in terms of interacting with the physical world, they’re still very limited. Even in things they do, like solve math problems and write essays, they take billions and billions of training examples before they can do them well,” explains Cold Spring Harbor Laboratory (CSHL) NeuroAI Scholar Kyle Daruwalla.

Daruwalla has been searching for new, unconventional ways to design AI that can overcome such computational obstacles. And he might have just found one.

The key was data movement. Most of modern computing’s energy consumption comes from shuttling data from one place to another. In artificial neural networks, which are made up of billions of connections, data can have a very long way to go.

So, to find a solution, Daruwalla looked for inspiration in one of the most computationally powerful and energy-efficient machines in existence—the human brain.

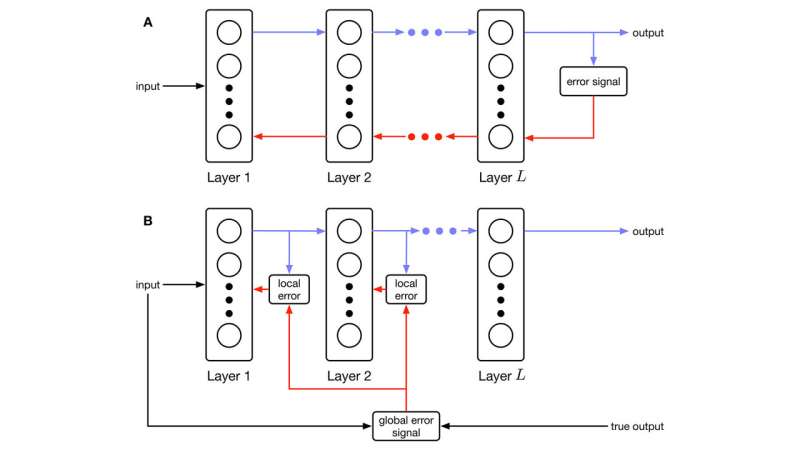

Daruwalla designed a new way for AI algorithms to move and process data much more efficiently, based on how our brains take in new information. The design allows individual AI “neurons” to receive feedback and adjust on the fly rather than wait for a whole circuit to update simultaneously. This way, data doesn’t have to travel as far and gets processed in real time.

“In our brains, our connections are changing and adjusting all the time,” Daruwalla says. “It’s not like you pause everything, adjust, and then resume being you.”
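To make the contrast concrete, here is a minimal Python sketch of a "local" update, in which each artificial neuron adjusts its own connections as soon as a sample arrives instead of waiting for a network-wide pass. It is an illustration only and not the rule derived in Daruwalla's paper. The class names `LocalNeuron` and `RunningBaseline`, the learning rate, and the decay value are all assumptions made for this example. The update itself is a generic three-factor Hebbian step (input times output times a broadcast feedback signal), and the running average is only a loose stand-in for a memory of recent feedback.

```python
class LocalNeuron:
    """Hypothetical unit that updates its own weights from signals it can see."""

    def __init__(self, weights, lr=0.1):
        self.weights = list(weights)
        self.lr = lr  # learning rate (assumed value, chosen for illustration)

    def activate(self, inputs):
        # Weighted sum of the inputs arriving at this neuron.
        return sum(w * x for w, x in zip(self.weights, inputs))

    def local_update(self, inputs, output, modulator):
        # Three-factor Hebbian step: pre-synaptic input * post-synaptic output
        # * one broadcast scalar. No other neuron's weights are consulted.
        self.weights = [
            w + self.lr * modulator * x * output
            for w, x in zip(self.weights, inputs)
        ]


class RunningBaseline:
    """Hypothetical stand-in for a memory of recent feedback (moving average)."""

    def __init__(self, decay=0.9):
        self.decay = decay
        self.value = 0.0

    def modulator(self, feedback):
        # Compare the new feedback with what was recently seen, then remember it.
        m = feedback - self.value
        self.value = self.decay * self.value + (1 - self.decay) * feedback
        return m


def process_stream(neurons, memory, samples):
    # Each sample is handled as it arrives; every neuron adjusts immediately.
    for inputs, feedback in samples:
        m = memory.modulator(feedback)
        for neuron in neurons:
            neuron.local_update(inputs, neuron.activate(inputs), m)


if __name__ == "__main__":
    layer = [LocalNeuron([0.5, -0.5]), LocalNeuron([0.1, 0.2])]
    process_stream(layer, RunningBaseline(), [([1.0, 2.0], 1.0), ([0.5, 0.0], 0.2)])
    print([n.weights for n in layer])
```

The design point is that `local_update` needs only three quantities that are available at the neuron itself, so nothing has to be sent back through the rest of the network before a connection changes.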

The findings are published in the journal Frontiers in Computational Neuroscience.

The new machine-learning model provides evidence for an as-yet-unproven theory that correlates working memory with learning and academic performance. Working memory is the cognitive system that enables us to stay on task while recalling stored knowledge and experiences.

“There have been theories in neuroscience of how working memory circuits could help facilitate learning. But there isn’t something as concrete as our rule that actually ties these two together. And so that was one of the nice things we stumbled into here. The theory led out to a rule where adjusting each synapse individually necessitated this working memory sitting alongside it,” says Daruwalla.

Daruwalla’s design may help pioneer a new generation of AI that learns like we do. That would not only make AI more efficient and accessible; it would also be something of a full-circle moment for neuroAI. Neuroscience has been feeding AI valuable data since long before ChatGPT uttered its first digital syllable. Soon, it seems, AI may return the favor.

More information: Kyle Daruwalla et al, Information bottleneck-based Hebbian learning rule naturally ties working memory and synaptic updates, Frontiers in Computational Neuroscience (2024). DOI: 10.3389/fncom.2024.1240348

Cold Spring Harbor Laboratory

Citation: Researchers develop new, more energy-efficient way for AI algorithms to process data (2024, June 20), retrieved 20 June 2024 from https://techxplore.com/news/2024-06-energy-efficient-ai-algorithms.html
