A team of AI researchers at OpenAI has developed a tool that AI developers can use to measure the machine-learning engineering capabilities of AI systems. The team has written a paper describing the benchmark, which it has named MLE-bench, and posted it on the arXiv preprint server. The team has also posted a web page on the company site introducing the new tool, which is open-source.

As machine learning and associated artificial intelligence applications have flourished over the past few years, new types of applications have been tested. One such application is machine-learning engineering, where AI is used to work through engineering thought problems, to carry out experiments and to generate new code.

The idea is to speed up the development of new discoveries or to find new solutions to old problems all while reducing engineering costs, allowing for the production of new products at a swifter pace.

Some in the field have even suggested that some types of AI engineering could lead to the development of AI systems that outperform humans in conducting engineering work, making their role in the process obsolete. Others in the field have expressed concerns regarding the safety of future versions of AI tools, wondering about the possibility of AI engineering systems discovering that humans are no longer needed at all.

The new benchmarking tool from OpenAI does not specifically address such concerns but does open the door to the possibility of developing tools meant to prevent either or both outcomes.

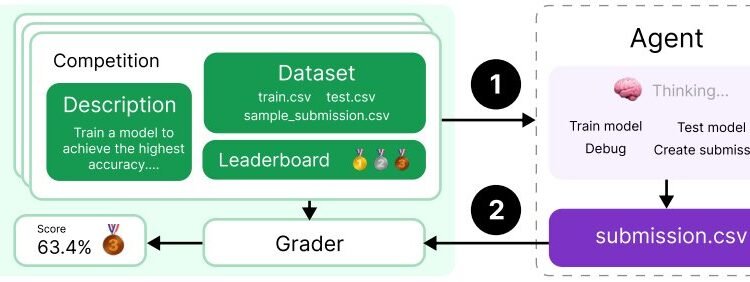

The new tool is essentially a series of tests, 75 in all, every one of them drawn from the Kaggle platform. Testing involves asking an AI system to solve as many of them as possible. All of them are based on real-world problems, such as asking a system to decipher an ancient scroll or develop a new type of mRNA vaccine.

The results are then reviewed by the system to see how well each task was solved and whether the result could be used in the real world, and a score is given. The results of such testing will no doubt also be used by the team at OpenAI as a yardstick to measure the progress of AI research.
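To make the testing loop concrete, here is a minimal Python sketch of how a benchmark of this general shape could be run and summarized. It is not MLE-bench's actual code or API: the `Task` class, the `run_benchmark` and `summarize` functions, the toy graders and the 0.5 pass mark are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    """One benchmark task (hypothetical structure, not MLE-bench's own)."""
    name: str
    grade: Callable[[str], float]  # maps a submission to a score in [0, 1]


def run_benchmark(agent: Callable[[str], str], tasks: List[Task]) -> Dict[str, float]:
    """Ask the agent for one submission per task and grade each one."""
    return {task.name: task.grade(agent(task.name)) for task in tasks}


def summarize(scores: Dict[str, float], pass_mark: float = 0.5) -> Dict[str, float]:
    """Aggregate per-task scores; pass_mark is an assumed threshold."""
    solved = sum(1 for s in scores.values() if s >= pass_mark)
    mean = sum(scores.values()) / len(scores) if scores else 0.0
    return {"tasks": len(scores), "solved": solved, "mean_score": mean}


# Toy stand-ins: two made-up tasks and an agent that always answers "42".
tasks = [
    Task("toy-task-a", lambda submission: 1.0 if submission == "42" else 0.0),
    Task("toy-task-b", lambda submission: 1.0 if submission == "7" else 0.0),
]
scores = run_benchmark(lambda task_name: "42", tasks)
summary = summarize(scores)
print(summary)
```

In a real evaluation the agent would be an AI system producing a full solution for each task and the grader would be task-specific; the sketch only shows the outer loop of attempting every task, scoring each attempt and reporting an aggregate.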

Notably, MLE-bench tests AI systems on their ability to conduct engineering work autonomously, which includes innovation. To improve their scores on such benchmark tests, it is likely that the AI systems being tested would also have to learn from their own work, perhaps including their results on MLE-bench.

More information:

Jun Shern Chan et al., MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering, arXiv (2024). DOI: 10.48550/arxiv.2410.07095

openai.com/index/mle-bench/


© 2024 Science X Network

Citation: OpenAI unveils benchmarking tool to measure AI agents’ machine-learning engineering performance (2024, October 15), retrieved 15 October 2024 from https://techxplore.com/news/2024-10-openai-unveils-benchmarking-tool-ai.html
