Generative models, artificial neural networks that can generate images or text, have become increasingly advanced in recent years. These models can also be used to create annotated images for training computer vision algorithms, which are designed to classify images or the objects contained within them.

While many generative models, particularly generative adversarial networks (GANs), can produce synthetic images that resemble those captured by cameras, reliably controlling the content of the images they produce has proved challenging. In many cases, the images generated by GANs do not meet the exact requirements of users, which limits their use for various applications.

Researchers at Seoul National University of Science and Technology recently introduced a new image generation framework designed to incorporate the content users would like generated images to contain. This framework, introduced in a paper published on the arXiv preprint server, allows users to exert greater control over the image generation process, producing images that are more aligned with the ones they were envisioning.

“Remarkable progress has been achieved in image generation with the introduction of generative models,” wrote Giang H. Le, Anh Q. Nguyen and their colleagues in their paper.

“However, precisely controlling the content in generated images remains a challenging task due to their fundamental training objective. This paper addresses this challenge by proposing a novel image generation framework explicitly designed to incorporate desired content in output images.”

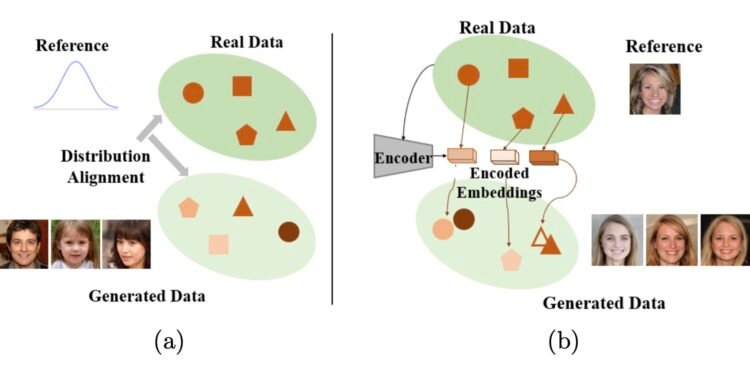

In contrast with many existing models for generating images, the framework developed by Le, Nguyen and their colleagues can be fed a real-world image, which it then uses to guide the image generation process. The content of the synthetic images it generates thus closely resembles that of the reference image, even if the images themselves are different.

“The framework utilizes advanced encoding techniques, integrating subnetworks called content fusion and frequency encoding modules,” wrote Le, Nguyen and their colleagues.

“The frequency encoding module first captures features and structures of reference images by exclusively focusing on selected frequency components. Subsequently, the content fusion module generates a content-guiding vector that encapsulates desired content features.”

The framework developed by the researchers thus has two distinct components. The first is the frequency encoding module, an encoder that extracts content-related features from the reference image fed to the model. The second is the content fusion module, which turns those features into a content-guiding vector that steers the generation of new images.
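To make the idea of focusing on selected frequency components more concrete, here is a minimal Python sketch using NumPy. It is not the authors' encoder: it simply assumes that the "selected" components are the low frequencies inside a circular mask, and the function name `keep_low_frequencies` and the `radius` parameter are hypothetical choices made for illustration.

```python
import numpy as np


def keep_low_frequencies(image: np.ndarray, radius: int) -> np.ndarray:
    """Rebuild a 2D grayscale image from its low-frequency components only.

    Assumption: "selected frequency components" means a circular low-pass
    mask. The paper may select components differently.
    """
    # Move to the frequency domain and put the zero frequency at the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(image))

    # Build a circular mask around the centre of the spectrum.
    height, width = image.shape
    rows, cols = np.ogrid[:height, :width]
    centre_row, centre_col = height // 2, width // 2
    mask = (rows - centre_row) ** 2 + (cols - centre_col) ** 2 <= radius ** 2

    # Zero out everything outside the mask and return to the image domain.
    filtered = spectrum * mask
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((32, 32))
    coarse = keep_low_frequencies(image, radius=4)
    # The coarse version keeps the overall layout but drops fine texture.
    print(coarse.shape, float(image.std()), float(coarse.std()))
```

In a setup like this, the filtered image (or features computed from it) would carry the coarse structure of the reference while discarding fine detail, which is one plausible reading of why a frequency-based encoder helps isolate content.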

“During the image generation process, content-guiding vectors from real images are fused with projected noise vectors,” wrote the authors. “This ensures the production of generated images that not only maintain consistent content from guiding images but also exhibit diverse stylistic variations.”
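The fusion step described in this quote can be illustrated with a small Python sketch. Everything specific here is an assumption: the vector sizes, the randomly initialised projection matrix `W_noise` (which would be learned in a real model), and the use of simple addition in `fuse` are illustrative stand-ins, since the article does not say how the authors combine the two vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dimensions chosen only for this illustration.
CONTENT_DIM = 16
NOISE_DIM = 8
LATENT_DIM = 16

# Hypothetical projection of noise into the latent space. In a trained
# model this would be learned rather than randomly initialised.
W_noise = rng.standard_normal((NOISE_DIM, LATENT_DIM)) * 0.1


def fuse(content_vec: np.ndarray, noise_vec: np.ndarray) -> np.ndarray:
    """Combine a content-guiding vector with a projected noise vector.

    Assumption: fusion is plain addition. The actual framework may use a
    different operation.
    """
    projected_noise = noise_vec @ W_noise
    return content_vec + projected_noise


if __name__ == "__main__":
    # One content vector, standing in for features of a reference image.
    content = rng.standard_normal(CONTENT_DIM)

    # Several noise samples give several latents that share the same content.
    latents = [fuse(content, rng.standard_normal(NOISE_DIM)) for _ in range(3)]
    for latent in latents:
        # Each latent differs from the content vector only by the noise term.
        print(float(np.linalg.norm(latent - content)))
```

The design point is that the content term is identical across samples while the noise term varies, which mirrors the claim that outputs keep consistent content but differ in style.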

Le, Nguyen and their colleagues evaluated their framework’s performance in a series of tests and compared the images it generated to those created by a conventional GAN-based model. The images they used to train the model and as references to guide the image generation process were drawn from various datasets, including the Flickr-Faces-HQ (FFHQ), Animal Faces-HQ (AFHQ) and Large-scale Scene Understanding (LSUN) datasets.

The results of these initial tests were promising: the new framework produced synthetic images whose content matched the reference image more closely than those created by the conventional GAN-based model. On average, the images generated by the framework preserved 85% of the reference image’s attributes.
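As a rough illustration of how an average attribute-preservation figure could be computed, the Python sketch below compares attribute labels for a reference image and a generated image. The article does not describe the authors' exact metric, so the attribute names, the dictionary representation, and the functions `preservation_rate` and `mean_preservation` are all hypothetical, and the values are toy data rather than results from the paper.

```python
def preservation_rate(reference: dict, generated: dict) -> float:
    """Fraction of the reference image's attributes found unchanged
    in the generated image."""
    if not reference:
        raise ValueError("reference must contain at least one attribute")
    matches = sum(generated.get(name) == value for name, value in reference.items())
    return matches / len(reference)


def mean_preservation(pairs: list) -> float:
    """Average preservation rate over (reference, generated) pairs."""
    if not pairs:
        raise ValueError("pairs must not be empty")
    return sum(preservation_rate(ref, gen) for ref, gen in pairs) / len(pairs)


if __name__ == "__main__":
    # Toy attribute labels, invented for this example.
    reference = {"glasses": True, "smiling": True, "hair": "dark", "hat": False}
    generated = {"glasses": True, "smiling": False, "hair": "dark", "hat": False}

    # Three of the four reference attributes survive in the generated image.
    print(preservation_rate(reference, generated))
```

Under this reading, a reported average of 85% would mean that, across many reference and generated pairs, 85% of the reference attributes were still present in the outputs.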

This recent study could inform the development of models for image generation that create images more aligned with the expectations of users. These models could be used to compile carefully tailored datasets to train image classification algorithms, but could also be integrated into AI-powered platforms for designers and other creative professionals.

More information: Giang H. Le et al, Content-Aware Preserving Image Generation, arXiv (2024). DOI: 10.48550/arxiv.2411.09871


© 2024 Science X Network

Citation: Novel framework can generate images more aligned with user expectations (2024, December 3), retrieved 3 December 2024 from https://techxplore.com/news/2024-11-framework-generate-images-aligned-user.html
