Ever since OpenAI released ChatGPT at the end of last year, terms like generative AI and large language models have been on everyone’s lips. But when you dig beneath the hype, large language models generally require a lot of expensive GPU chips to run. SambaNova is introducing a chip today that it says will reduce that cost significantly while handling a 5 trillion-parameter model.

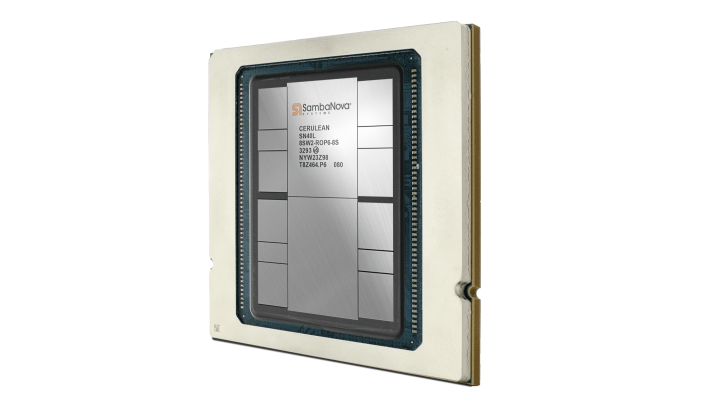

SambaNova might not be a household name like Google, Microsoft or Amazon, but it has spent several years building a full-stack AI solution that includes both hardware and software. It has raised over $1 billion, per Crunchbase, from investors like Intel Capital, BlackRock and SoftBank Vision Fund. Today, the company unveiled its latest chip, the SN40L, the fourth generation of its in-house custom AI chips.

Company founder and CEO Rodrigo Liang says the idea behind building its own chips is to control the underlying hardware for maximum efficiency, something that has become increasingly necessary as the world shifts to processing these resource-intensive large language models.

“We need to stop using this brute force approach of using more and more chips for large language model use cases. So we went off and created the SN40L that is tuned specifically for very, very large language models to power AI for enterprises,” Liang told TechCrunch.

“How many resources does it take to actually run a trillion-parameter model like a GPT-4? I can do it in eight sockets, I can deliver it on prem, and I can deliver it fully optimized on that hardware, and you get state-of-the-art accuracy,” he said.

That’s a bold claim, but Liang says his new chips are 30x more efficient because they reduce the number of chips required to power these models, and because they are built for SambaNova’s own software, they are configured to run it at maximum efficiency. He claims that running the same trillion-parameter model on competitors’ chips would take 50 to 200 chips, while SambaNova has reduced that to just eight.

SambaNova delivers a full-stack hardware and software solution with everything needed to build AI applications. “We’re in the business of creating AI assets, which allows you to quickly train models based on your private data, and that becomes an asset for the company,” he said.

He points out that even though SambaNova is helping customers train the model, it remains under their ownership. “So what we tell the customer is, it’s your data and your model. After we have trained the model on your data, we actually give the ownership of the model to the company in perpetuity.”

By providing the hardware and software solution in the form of a multi-year subscription, Liang says the customer has more cost certainty over their AI projects.

The new SN40L chip is available starting today and is fully backward compatible with previous-generation chips, according to the company.