Artificial intelligence not only delivers impressive performance but also creates a significant demand for energy. The more demanding the tasks it is trained for, the more energy it consumes.

Víctor López-Pastor and Florian Marquardt, two scientists at the Max Planck Institute for the Science of Light in Erlangen, Germany, present a method by which artificial intelligence could be trained much more efficiently. Their approach relies on physical processes instead of the digital artificial neural networks currently used. The work is published in the journal Physical Review X.

OpenAI, the company behind GPT-3, the artificial intelligence (AI) that makes ChatGPT an eloquent and apparently well-informed chatbot, has not revealed how much energy was needed to train it. According to the German statistics company Statista, training would have required around 1,000 megawatt hours, about as much as 200 German households with three or more people consume in a year. While this energy expenditure has allowed GPT-3 to learn whether, in its data sets, the word “deep” is more likely to be followed by “sea” or “learning”, by all accounts it has not understood the underlying meaning of such phrases.
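The Statista comparison can be checked with simple arithmetic: dividing the estimate by the number of households gives the implied annual consumption per household. Both inputs are the figures quoted above; only the conversion from megawatt hours to kilowatt hours is added.

```python
# Statista's estimate for training GPT-3, as quoted in the text (megawatt hours)
training_energy_mwh = 1_000
# Number of German households (three or more people) used in the comparison
households = 200

# Implied annual consumption per household, converted to kilowatt hours
per_household_kwh = training_energy_mwh * 1_000 / households
print(f"{per_household_kwh:.0f} kWh per household per year")  # → 5000 kWh per household per year
```

In other words, the comparison assumes roughly 5,000 kWh of electricity per household per year.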

Neural networks on neuromorphic computers

To reduce the energy consumption of computers, and of AI applications in particular, several research institutions have spent the past few years investigating an entirely new concept for how computers could process data in the future. The concept is known as neuromorphic computing. Although the name sounds similar to artificial neural networks, it in fact has little to do with them, because artificial neural networks run on conventional digital computers.

In artificial neural networks, the software, or more precisely the algorithm, is modeled on the way the brain works, but digital computers serve as the hardware. They perform the calculation steps of the neural network in sequence, one after the other, with processor and memory kept separate.

“The data transfer between these two components alone devours large quantities of energy when a neural network trains hundreds of billions of parameters, i.e., synapses, with up to one terabyte of data,” says Marquardt, director of the Max Planck Institute for the Science of Light and professor at the University of Erlangen.
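A rough estimate shows why shuttling parameters between memory and processor matters at this scale. The sketch assumes 100 billion parameters (the low end of "hundreds of billions") stored at 2 bytes each; both numbers are illustrative assumptions, and the result counts only a single read of every weight, whereas training repeats such transfers at every step.

```python
# Hypothetical figures: the quote says "hundreds of billions" of parameters,
# so 100 billion is taken as the low end. 16-bit storage (2 bytes) is assumed.
parameters = 100e9
bytes_per_parameter = 2

# Bytes that must travel from memory to processor to read every weight once
bytes_per_pass = parameters * bytes_per_parameter
print(f"{bytes_per_pass / 1e9:.0f} GB per full pass over the weights")  # → 200 GB per full pass over the weights
```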

The human brain works entirely differently. It would probably never have been evolutionarily competitive had its energy efficiency been similar to that of computers built from silicon transistors; it would most likely have failed by overheating.

The brain carries out the numerous steps of a thought process in parallel rather than sequentially. Its nerve cells, or more precisely the synapses, act as processor and memory combined. Various systems around the world are being considered as possible neuromorphic counterparts to our nerve cells, including photonic circuits that use light instead of electrons to perform calculations. Their components serve simultaneously as switches and memory cells.

A self-learning physical machine optimizes its synapses independently

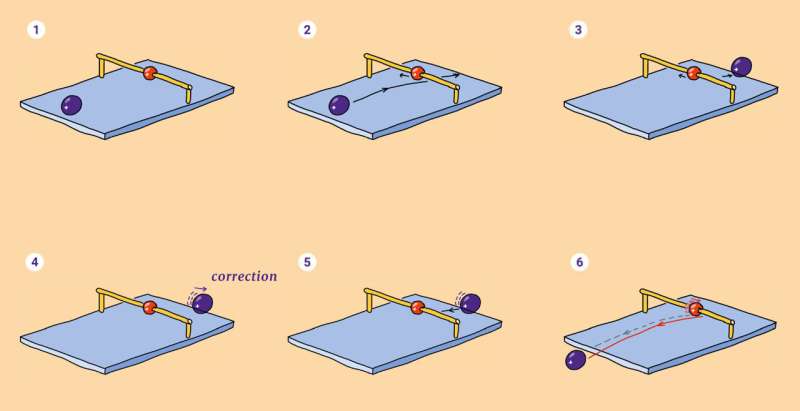

Together with López-Pastor, a doctoral student at the Max Planck Institute for the Science of Light, Marquardt has now devised an efficient training method for neuromorphic computers. “We have developed the concept of a self-learning physical machine,” explains Florian Marquardt. “The core idea is to carry out the training in the form of a physical process, in which the parameters of the machine are optimized by the process itself.”

When training conventional artificial neural networks, external feedback is necessary to adjust the strengths of the many billions of synaptic connections. “Not requiring this feedback makes the training much more efficient,” says Marquardt. Implementing and training an artificial intelligence on a self-learning physical machine would therefore save not only energy but also computing time.
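To make the "external feedback" of conventional training concrete, here is a minimal sketch of ordinary gradient descent on a two-parameter model. The model itself only computes outputs; a separate loop measures the error, computes gradients and writes corrected parameters back, which is the feedback step a self-learning machine is meant to avoid. The model, data, learning rate and step count are hypothetical choices for illustration, not part of the authors' work.

```python
def train_with_external_feedback(xs, ys, steps=200, lr=0.1):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: the "model" just produces outputs
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # External step: gradients of the mean squared error w.r.t. w and b
        grad_w = 2 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2 * sum(errs) / n
        # Feedback: the parameters are adjusted from outside the model
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical training data: 51 points on the line y = 3x + 0.5
xs = [i / 25 - 1 for i in range(51)]
ys = [3 * x + 0.5 for x in xs]
w, b = train_with_external_feedback(xs, ys)
print(round(w, 3), round(b, 3))
```

In a digital computer every one of these feedback steps means reading and writing all parameters, which is exactly the traffic between processor and memory described earlier.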

“Our method works regardless of which physical process takes place in the self-learning machine, and we do not even need to know the exact process,” explains Marquardt. “However, the process must fulfill a few conditions. Most importantly it must be reversible, meaning it must be able to run forwards or backwards with a minimum of energy loss.”
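The reversibility condition can be illustrated with a toy system. The sketch below is not the authors' method (the paper's title refers to Hamiltonian echo backpropagation, which is not implemented here); it simply integrates a frictionless pendulum with the leapfrog scheme, a standard time-reversible integrator, runs it forwards, flips the momentum and runs it again. The pendulum, step size and step count are arbitrary choices for illustration.

```python
import math

def force(q):
    # -dH/dq for the pendulum Hamiltonian H = p**2 / 2 - cos(q)
    return -math.sin(q)

def leapfrog(q, p, steps, dt=0.01):
    """Integrate the pendulum with the time-reversible leapfrog scheme."""
    for _ in range(steps):
        p += 0.5 * dt * force(q)  # half kick
        q += dt * p               # drift
        p += 0.5 * dt * force(q)  # half kick
    return q, p

q0, p0 = 1.0, 0.0
q1, p1 = leapfrog(q0, p0, steps=1000)   # run forwards
q2, p2 = leapfrog(q1, -p1, steps=1000)  # flip momentum and run "backwards"
p2 = -p2                                # undo the momentum flip
print(abs(q2 - q0), abs(p2 - p0))       # both tiny, limited only by rounding
```

Because no energy is dissipated, the trajectory can be retraced to its starting point; adding friction to `force` would break this property.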

“In addition, the physical process must be non-linear, meaning sufficiently complex,” says Marquardt. Only non-linear processes can accomplish the complicated transformations between input data and results. A pinball rolling across a plate without colliding with another ball follows a linear process. If it collides with another ball, however, the situation becomes non-linear.
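The difference between linear and non-linear processes can be made concrete with the superposition test: a linear process responds to the sum of two inputs exactly as the sum of its responses to each input alone, and chaining linear steps never escapes that rule. The functions below (a doubling map and `tanh`) are hypothetical stand-ins chosen for illustration, not models of the pinball or of any optical component.

```python
import math

def linear(x):
    # Hypothetical linear process: output is twice the input
    return 2.0 * x

def chained_linear(x):
    # Two linear steps in a row: still just a single linear map (4 * x)
    return linear(linear(x))

def nonlinear(x):
    # Hypothetical non-linear process: tanh saturates for large inputs
    return math.tanh(x)

def obeys_superposition(f, a, b):
    """Check additivity: a linear f satisfies f(a + b) == f(a) + f(b)."""
    return math.isclose(f(a + b), f(a) + f(b))

print(obeys_superposition(linear, 0.3, 0.7))          # True
print(obeys_superposition(chained_linear, 0.3, 0.7))  # True
print(obeys_superposition(nonlinear, 0.3, 0.7))       # False
```

Because any chain of linear steps collapses into one linear map, stacking more of them adds no expressive power; a non-linear element is what allows the complicated transformations between input and output.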

Practical test in an optical neuromorphic computer

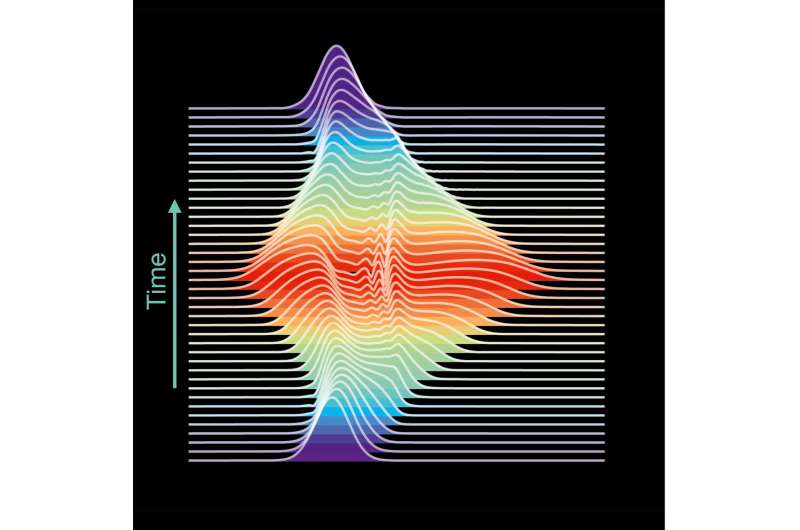

Examples of reversible, non-linear processes can be found in optics. Indeed, López-Pastor and Marquardt are already collaborating with an experimental team developing an optical neuromorphic computer. This machine processes information in the form of superimposed light waves, whereby suitable components regulate the type and strength of the interaction. The researchers’ aim is to put the concept of the self-learning physical machine into practice.

“We hope to be able to present the first self-learning physical machine in three years,” says Florian Marquardt. By then, there should be neural networks with many more synapses, trained on significantly larger amounts of data than today's.

As a consequence, there will likely be an even greater desire to implement neural networks outside conventional digital computers, replacing those computers with efficiently trained neuromorphic computers. “We are therefore confident that self-learning physical machines have a strong chance of being used in the further development of artificial intelligence,” says the physicist.

More information:

Víctor López-Pastor et al, Self-Learning Machines Based on Hamiltonian Echo Backpropagation, Physical Review X (2023). DOI: 10.1103/PhysRevX.13.031020

Provided by

Max-Planck-Institut für die Physik des Lichts

Citation:

New physics-based self-learning machines could replace current artificial neural networks and save energy (2023, September 8) retrieved 8 September 2023 from https://techxplore.com/news/2023-09-physics-based-self-learning-machines-current-artificial.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.