Ever had a song stuck in your head? New research from a team of neuroscientists at the University of California, Berkeley, maps out regions of the human brain that make earworms—and music perception more broadly—possible.

The research results, recently published in the journal PLOS Biology, show how human brains respond to a musical performance. When a song snippet reaches our ears, the cochlea, the spiral cavity of the inner ear, converts the sound into neuronal activity. Ludovic Bellier (at the time of the research a postdoc at the Helen Wills Neuroscience Institute at the University of California, Berkeley) studies how neural networks in the brain ultimately “hear” these sounds.

For his group’s experiment, Bellier and colleagues analyzed the brain activity of people listening to a song by the psychedelic rock band Pink Floyd. The 29 participants were epilepsy patients at Albany Medical Center in Albany, N.Y. The patients, who had electrical sensors implanted on the surface of their brains as a part of their medical treatment, were asked to listen attentively to Pink Floyd’s song “Another Brick in the Wall.” As the patients listened, the researchers recorded the oscillating electrical potentials from each on-brain electrode. These measurements, called electrocorticogram recordings (ECoG), are broadband signals reflecting neural activity in a small region of the brain surrounding each electrode.

The researchers hypothesized that the ECoG signals recorded from each patient reflected how their brains were working to perceive the music played for them. To test their hypothesis and to see which regions of the patients’ brains were most involved in musical perception, the team fit models to reconstruct the song’s audio spectrogram—an image showing the distribution of sound energy across frequencies over time—when presented with ECoG features as inputs. If the models are able to accurately reconstruct the spectrogram after regularized training, then the ECoG features must reflect some information about the music played to the patient.
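The decoding idea described above can be sketched in a few lines. This is a minimal illustration on synthetic data, not the researchers' actual pipeline: the array sizes, the noise level, the `alpha` penalty, and the helper names `fit_ridge` and `simulate` are all assumptions made for the example, and ridge regression stands in for whatever regularized models the team used.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    No intercept is fitted, which is fine here because the synthetic
    data below are zero-mean.
    """
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ Y)

def simulate(n_samples=2000, n_electrodes=40, n_freq_bins=16, seed=0):
    """Synthetic stand-in: each spectrogram bin is a noisy linear mix of electrodes."""
    rng = np.random.default_rng(seed)
    ecog = rng.standard_normal((n_samples, n_electrodes))
    true_weights = rng.standard_normal((n_electrodes, n_freq_bins))
    noise = rng.standard_normal((n_samples, n_freq_bins))
    spectrogram = ecog @ true_weights + 2.0 * noise
    return ecog, spectrogram

ecog, spectrogram = simulate()
split = 1500  # first 1,500 time points for training, the rest held out

weights = fit_ridge(ecog[:split], spectrogram[:split], alpha=10.0)
reconstruction = ecog[split:] @ weights

# Correlation between held-out spectrogram values and their reconstruction.
corr = np.corrcoef(reconstruction.ravel(), spectrogram[split:].ravel())[0, 1]
print(f"held-out correlation: {corr:.2f}")
```

If the held-out correlation is well above zero, the input features must carry information about the target, which is the logic of the hypothesis test described in the article.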

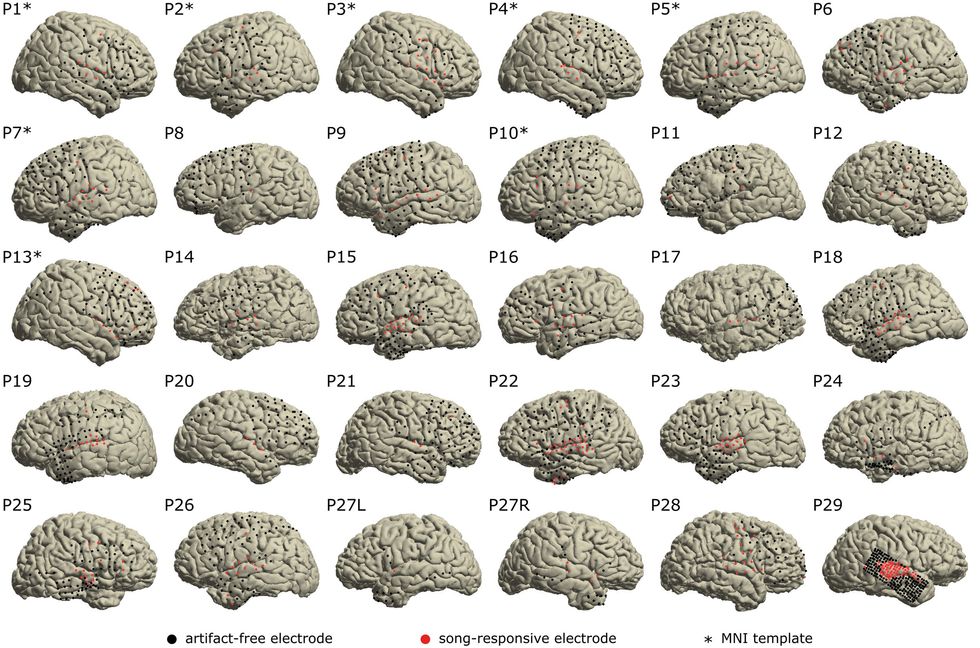

Neuroscientists attached electrical sensors to the surface of 29 epilepsy patients’ brains to study their response to auditory stimuli. Here are the locations of the sensors, with each red dot indicating an electrode whose brain region recorded neural activity in response to hearing music. Credit: University of California, Berkeley

According to Bellier, the team was able to verify its hypothesis. Using multiple types of models to reconstruct the auditory signal, the scientists could decode at least a partially audible likeness of the song. The researchers say their results are, to the best of their knowledge, the first published reconstruction of musical audio from ECoG data. Though the match between original and reconstructed audio was still far from perfect (the statistical r-squared value was 0.325), some of the models’ outputs could be recognized as the classic song, if distantly, when converted back into an audio waveform. (See below for the original audio clip plus two of the researchers’ reconstructions of that audio clip from the subjects’ neural signals.) A more complex, nonlinear reconstruction algorithm, a multilayer perceptron (here, a simple two-layer artificial neural network), resulted in a higher r-squared value of 0.429 and clearer audio reconstructions.
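To give a feel for what an r-squared value measures, here is a small sketch. It assumes r-squared means the squared Pearson correlation between original and reconstructed values; the article does not spell out the exact definition, and the function name `r_squared` and the synthetic data are illustrative only.

```python
import numpy as np

def r_squared(original, reconstructed):
    """Squared Pearson correlation between two equally sized arrays."""
    original = np.asarray(original, dtype=float).ravel()
    reconstructed = np.asarray(reconstructed, dtype=float).ravel()
    r = np.corrcoef(original, reconstructed)[0, 1]
    return r ** 2

# A reconstruction that is a scaled copy of the original is perfectly correlated.
perfect = r_squared([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])

# A reconstruction buried in noise of equal variance scores roughly 0.5.
rng = np.random.default_rng(0)
target = rng.standard_normal(5000)
noisy = target + rng.standard_normal(5000)
partial = r_squared(target, noisy)

print(f"perfect: {perfect:.3f}, noisy: {partial:.3f}")
```

Under that reading, an r-squared of 0.325 corresponds to a correlation of about 0.57, and 0.429 to about 0.65.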

To test which regions of the brain were most involved in musical processing, the researchers trained models on the same audio reconstruction task while removing electrode inputs. Taken together, the ECoG data from all patients covered much of the brain’s total surface, so the scientists were able to consider many potential regions of the brain as crucial players in the task of generating the experience of hearing music.

By comparing the performance of models that used all brain regions with that of models that left some out (an approach sometimes called an ablation study), the researchers were able to tell how much information each brain region provided. Put differently, if reconstruction accuracy changed little when a particular region’s electrodes were removed, then that region was less involved in musical perception.
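The ablation logic can be illustrated with a toy example. Everything here is hypothetical: the three region names, the electrode counts, and the synthetic data, in which `region_a` is built to carry most of the signal, `region_b` a little, and `region_c` none. It is a sketch of the general technique, not the study's analysis.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression (no intercept; data are zero-mean)."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)

def held_out_r2(X, Y, keep, split, alpha=1.0):
    """Train on the first `split` samples using only the electrodes in `keep`,
    then return the squared correlation on the remaining samples."""
    W = fit_ridge(X[:split][:, keep], Y[:split], alpha)
    pred = X[split:][:, keep] @ W
    r = np.corrcoef(pred.ravel(), Y[split:].ravel())[0, 1]
    return r ** 2

rng = np.random.default_rng(1)
n_samples, n_bins = 2000, 8
regions = {  # hypothetical electrode groupings
    "region_a": np.arange(0, 10),
    "region_b": np.arange(10, 20),
    "region_c": np.arange(20, 30),
}

X = rng.standard_normal((n_samples, 30))
W_true = np.zeros((30, n_bins))
W_true[regions["region_a"]] = rng.standard_normal((10, n_bins))        # strong contribution
W_true[regions["region_b"]] = 0.3 * rng.standard_normal((10, n_bins))  # weak contribution
# region_c keeps zero weights: it carries no information about the target.
Y = X @ W_true + rng.standard_normal((n_samples, n_bins))

all_electrodes = np.arange(30)
baseline = held_out_r2(X, Y, all_electrodes, split=1500)

# Drop each region in turn and record how much accuracy is lost.
drops = {}
for name, idx in regions.items():
    keep = np.setdiff1d(all_electrodes, idx)
    drops[name] = baseline - held_out_r2(X, Y, keep, split=1500)

for name, drop in drops.items():
    print(f"{name}: drop in r-squared = {drop:.3f}")
```

The region whose removal costs the most accuracy is the one the model depended on most, which is how an ablation ranks regions by how much information they provide.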

From this process, the researchers found that removing data from the superior temporal gyrus (STG), an area found on both the left and right sides of the brain, caused the biggest drop in reconstruction accuracy. This region, coincidentally located near the ears, is also known to play an important role in speech processing, so it may in fact play a broader role in the brain’s capacity to process complex, structured sound.

The team also found that removing data features from the left and right STGs individually had substantially different effects on reconstruction accuracy. This indicated that activity from the STG on the right side of participants’ brains had more information about the song than the left STG did. Curiously, previous research in the field shows that the very opposite seems to be the case for speech processing, where the left STG often does more work than the right STG. “In 95 percent of right-handed individuals, speech is located strongly in the left hemisphere,” says Bellier. “What we show in the paper is that music is more distributed [between left and right STG], but with a right dominance”—meaning that the right STG processed more musical information than the left.

“There’s a really easy follow-up we can do,” Bellier says. The present study considers only high-frequency brainwave information, from 70 to 150 hertz. However, Bellier says, features computed from lower frequency ranges of ECoG signals can also encode important information, which is why the team plans to repeat the current paper’s analyses on different frequency ranges of neural activity. Present and future work from the group will add, as the paper concludes, “another brick in the wall of our understanding of music processing in the human brain.”
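As a rough illustration of what restricting an analysis to one frequency range involves, the sketch below isolates the amplitude of a signal within a chosen band. It is an assumption-laden toy: the sampling rate, the test signal, the 4-to-30-hertz comparison band, and the FFT-mask approach in the hypothetical `band_amplitude` helper are all choices made for this example, and a real pipeline would likely use properly designed filters.

```python
import numpy as np

def band_amplitude(signal, fs, low_hz, high_hz):
    """Amplitude envelope of `signal` within [low_hz, high_hz].

    Keeps only the positive-frequency FFT bins inside the band and doubles
    them, which yields the band-limited analytic signal; its magnitude is
    the envelope. A crude stand-in for a designed band-pass filter.
    """
    spectrum = np.fft.fft(signal)
    freqs = np.fft.fftfreq(signal.size, d=1.0 / fs)
    mask = (freqs >= low_hz) & (freqs <= high_hz)
    analytic = np.fft.ifft(2.0 * spectrum * mask)
    return np.abs(analytic)

fs = 1000.0                # assumed sampling rate in hertz
t = np.arange(2000) / fs   # two seconds of samples

# Toy trace: a large 10 Hz rhythm plus a smaller 100 Hz component.
trace = np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 100 * t)

high = band_amplitude(trace, fs, 70, 150)  # the band used in the study
low = band_amplitude(trace, fs, 4, 30)     # an arbitrary lower band

print(f"mean 70-150 Hz amplitude: {high.mean():.2f}")
print(f"mean 4-30 Hz amplitude:   {low.mean():.2f}")
```

Repeating the same decoding analysis on `low` instead of `high` is the kind of follow-up the article describes: the same electrodes, but a different slice of their activity.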
