Could computers ever learn more like humans do, without relying on artificial intelligence (AI) systems that must undergo extremely expensive training?

Neuromorphic computing might be the answer. This emerging technology uses brain-inspired computer hardware that could perform AI tasks much more efficiently than conventional systems, with far fewer training computations and much less power. Consequently, neuromorphic computers also have the potential to reduce reliance on energy-intensive data centers and bring AI inference and learning to mobile devices.

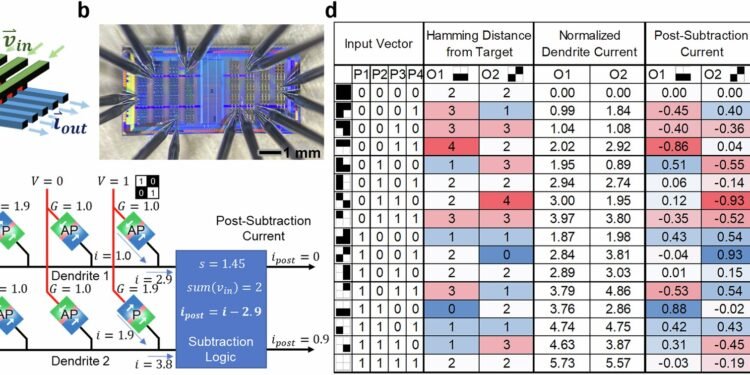

Dr. Joseph S. Friedman, associate professor of electrical and computer engineering at The University of Texas at Dallas, and his team of researchers in the NeuroSpinCompute Laboratory have taken an important step toward building a neuromorphic computer: they created a small-scale prototype that learns patterns and makes predictions using fewer training computations than conventional AI systems. Their next challenge is to scale up this proof of concept.

“Our work shows a potential new path for building brain-inspired computers that can learn on their own,” Friedman said. “Since neuromorphic computers do not need massive amounts of training computations, they could power smart devices without huge energy costs.”

The team, which included researchers from Everspin Technologies Inc. and Texas Instruments, described the prototype in a study published in Communications Engineering.

Conventional computers and graphics processing units (GPUs) keep memory storage separate from information processing. As a result, they cannot make AI inferences as efficiently as the human brain can. They also require large amounts of labeled data and an enormous number of complex training computations, which can cost hundreds of millions of dollars.

Neuromorphic computers integrate memory storage with processing, which allows them to perform AI operations with much greater efficiency and lower costs. Neuromorphic hardware is inspired by the brain, where networks of neurons and synapses process and store information, respectively. The synapses form the connections between neurons, strengthening or weakening based on patterns of activity. This allows the brain to adapt continuously as it learns.
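To make the idea of merging memory and processing concrete, here is a minimal Python sketch of a generic resistive crossbar, a layout common in in-memory computing research. It is an illustrative assumption, not a description of the UT Dallas prototype: each stored weight is a device conductance, input voltages drive the rows, and each column current is a weighted sum (Ohm's law for each device, Kirchhoff's current law along each column), so the multiply-accumulate happens where the weights are stored. The function name `crossbar_currents` and all values are hypothetical.

```python
from typing import List


def crossbar_currents(conductances: List[List[float]],
                      voltages: List[float]) -> List[float]:
    """Column currents (A) of an idealized crossbar.

    conductances[i][j] is the conductance (S) of the device joining row i
    to column j; voltages[i] is the voltage (V) applied to row i.
    Each device passes G * V (Ohm's law), and the currents on a column
    add up (Kirchhoff's current law).
    """
    if len(conductances) != len(voltages):
        raise ValueError("one voltage per row is required")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v
    return currents


# Hypothetical example: 2 input rows, 2 output columns.
G = [[1e-3, 2e-3],
     [3e-3, 0.0]]
V = [0.1, 0.2]
print(crossbar_currents(G, V))
```

In a physical array the wires perform the summation directly; the loop here only mimics that arithmetic.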

Friedman’s approach builds on a principle proposed by neuropsychologist Dr. Donald Hebb, known as Hebb’s law and often summarized as “neurons that fire together, wire together.”

“The principle that we use for a computer to learn on its own is that if one artificial neuron causes another artificial neuron to fire, the synapse connecting them becomes more conductive,” Friedman said.
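The rule Friedman describes can be sketched in a few lines of Python. This is a simplified, hypothetical model, not the team's circuit: a threshold neuron receives current through one synapse plus an optional external input, and whenever a presynaptic spike contributes to a postsynaptic spike, the synapse's conductance rises by a fixed increment up to a ceiling. The names `Synapse` and `hebbian_step` and the parameters `increment`, `g_max`, and `threshold` are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    conductance: float      # synaptic strength, arbitrary units
    increment: float = 0.1  # hypothetical potentiation step per Hebbian event
    g_max: float = 1.0      # hypothetical ceiling on conductance


def hebbian_step(syn: Synapse, pre_fired: bool, external_drive: float = 0.0,
                 threshold: float = 0.5) -> bool:
    """Advance one time step; return True if the postsynaptic neuron fires.

    The postsynaptic neuron sums the current arriving through the synapse
    (only when the presynaptic neuron fires) plus any external input, and
    fires if the total reaches `threshold`. If the presynaptic spike
    contributed to that firing, the synapse becomes more conductive.
    """
    synaptic_input = syn.conductance if pre_fired else 0.0
    post_fired = synaptic_input + external_drive >= threshold
    if pre_fired and post_fired:
        syn.conductance = min(syn.g_max, syn.conductance + syn.increment)
    return post_fired


syn = Synapse(conductance=0.1)
print(hebbian_step(syn, pre_fired=True))  # False: too weak to fire alone
# Pair presynaptic spikes with an external input several times.
for _ in range(5):
    hebbian_step(syn, pre_fired=True, external_drive=0.5)
print(hebbian_step(syn, pre_fired=True))  # True: the synapse has strengthened
```

Pairing the weak input with a stronger one lets the synapse strengthen until it can fire the neuron on its own, which is the “fire together, wire together” behavior in miniature.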

A major innovation in Friedman’s design is the use of magnetic tunnel junctions (MTJs), nanoscale devices that consist of two layers of magnetic material separated by a thin insulating barrier. Electrons can travel, or tunnel, through this barrier more easily when the magnetizations of the two layers point in the same direction and less easily when they point in opposite directions.
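The two-state behavior of an MTJ is commonly summarized by its tunnel magnetoresistance (TMR) ratio, (R_AP - R_P) / R_P, where R_P and R_AP are the resistances in the parallel and antiparallel states. The Python sketch below uses hypothetical resistance values, not figures from the study, to show how a small read voltage distinguishes the two states by the current that flows.

```python
from enum import Enum


class Alignment(Enum):
    PARALLEL = "P"       # both magnetic layers point the same way
    ANTIPARALLEL = "AP"  # the layers point in opposite directions


# Hypothetical resistances in ohms (not values from the study).
R_P = 5e3
R_AP = 10e3


def tmr_ratio(r_p: float, r_ap: float) -> float:
    """Tunnel magnetoresistance ratio, (R_AP - R_P) / R_P."""
    return (r_ap - r_p) / r_p


def read_current(state: Alignment, v_read: float) -> float:
    """Current in amperes through the MTJ for a small read voltage in volts."""
    resistance = R_P if state is Alignment.PARALLEL else R_AP
    return v_read / resistance


print(f"TMR ratio: {tmr_ratio(R_P, R_AP):.0%}")  # TMR ratio: 100%
for state in Alignment:
    print(state.name, read_current(state, v_read=0.1))
```

The parallel state draws more current because tunneling is easier when the magnetizations are aligned.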

In neuromorphic systems, MTJs can be connected in networks to mimic the way the brain processes and learns patterns. As signals pass through MTJs in a coordinated manner, their connections adjust to strengthen certain pathways, much as synaptic connections in the brain are reinforced during learning. The MTJs’ binary switching makes them reliable for storing information, resolving a challenge that has long impeded alternative neuromorphic approaches.
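One way to picture how binary devices can still give a synapse an adjustable strength is to wire several MTJs in parallel and let each Hebbian event switch one more device from the antiparallel (low-conductance) state to the parallel (high-conductance) state. This is an illustrative assumption, not necessarily the architecture used in the study. In the Python sketch below, `MTJSynapse`, `G_P`, `G_AP`, and the device count are hypothetical; each device holds one bit, and the synapse's conductance is the sum over its devices.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical device conductances in siemens (not values from the study).
G_P = 1 / 5e3    # parallel state: low resistance, high conductance
G_AP = 1 / 10e3  # antiparallel state: high resistance, low conductance


@dataclass
class MTJSynapse:
    """Hypothetical synapse made of several binary MTJs wired in parallel."""
    n_devices: int = 4
    # True means the device is in the parallel state; all start antiparallel.
    states: List[bool] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.states:
            self.states = [False] * self.n_devices

    def conductance(self) -> float:
        # Conductances of devices wired in parallel simply add.
        return sum(G_P if parallel else G_AP for parallel in self.states)

    def potentiate(self) -> None:
        """Hebbian event: switch the first antiparallel MTJ to parallel, if any."""
        for i, parallel in enumerate(self.states):
            if not parallel:
                self.states[i] = True
                return


syn = MTJSynapse()
levels = [syn.conductance()]
for _ in range(5):
    syn.potentiate()
    levels.append(syn.conductance())
# Conductance rises in equal steps, then saturates once every MTJ is parallel.
print(levels)
```

Because each device only needs to be read as one of two well-separated levels, small variations in resistance do not corrupt its stored bit, which is the reliability advantage attributed to binary switching.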

More information:

Peng Zhou et al., Neuromorphic Hebbian learning with magnetic tunnel junction synapses, Communications Engineering (2025). DOI: 10.1038/s44172-025-00479-2

University of Texas at Dallas

Citation:

Neuromorphic computer prototype learns patterns with fewer computations than traditional AI (2025, October 29) retrieved 29 October 2025 from https://techxplore.com/news/2025-10-neuromorphic-prototype-patterns-traditional-ai.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.