The development of affordable, high-performance sensors could have important implications for robotics research, as better perception can improve robot manipulation and navigation. In recent years, engineers have introduced a wide range of advanced touch sensors that improve robots' ability to detect tactile signals and use that information to guide their actions.

Researchers at New York University have introduced AnySkin, a low-cost and durable sensor that is easy to assemble and integrate into robotic systems. The sensor, described in a paper pre-published on arXiv, is far more accessible than many other tactile sensors developed in recent years and could thus open new opportunities for robotics research.

“Touch is fundamental to the way humans interact with the world around them, but in contemporary robotics, the sense of touch lags far behind vision, and I have been trying to understand why for the past few years,” Raunaq Bhirangi, co-author of the paper, told Tech Xplore.

“The most common reasons we’ve heard from roboticists are: ‘It’s too difficult to integrate into my setup,’ ‘How do I train a neural network with this?’ ‘I have to use the same copy of the sensor for data collection and evaluation—what if it rips midway?’ AnySkin was expressly designed to address each of these concerns.”

AnySkin, the new magnetic tactile sensor designed by Bhirangi and his colleagues, is an updated version of ReSkin, a sensor the researchers introduced in a previous paper. The new sensor builds on ReSkin's simple design, but it offers better signal consistency and physically separates the device's electronics from its sensing interface.

AnySkin can be assembled in just a few seconds, and its signals can be used to train artificial neural network models with little to no pre-processing. Compared to ReSkin, it also collects tactile signals with greater consistency and can be quickly and easily replaced if accidentally damaged.

“If you’re trying to teach your robot to perform exciting tasks and accidentally rip the skin, you can replace your skin in 10 seconds and get on with your experiment,” said Bhirangi. “AnySkin consists of two main components—the skin and the electronics. The skin is a magnetic elastomer made by curing a mix of magnetic particles with silicone, followed by magnetization using a pulse magnetizer.”

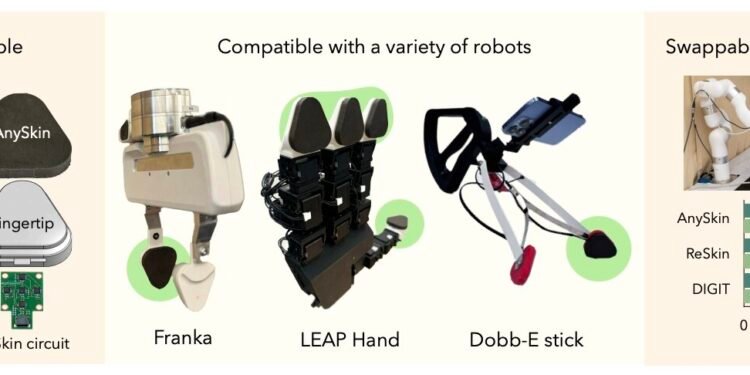

The self-adhering design of the AnySkin sensor allows greater flexibility in how the sensor is integrated: the skin can simply be stretched and slipped onto various surfaces to equip them with sensing capabilities.

The sensor is also highly versatile, as it can easily be fabricated in different shapes and forms. If damaged, the skin can simply be peeled off the surface and replaced.

In initial tests, the researchers found that their sensor performed well, with performance comparable to that of other well-established tactile sensors. Notably, they also observed that different AnySkin sensors exhibit very similar performance and sensing responses, which suggests that the sensor could be reliably reproduced and deployed on a large scale.

“We used machine learning to train some robot models end-to-end, that take in raw signal from AnySkin along with images from different viewpoints and use this information to perform some really precise tasks—locate a socket strip and insert a plug into the first socket, locate a credit card machine and swipe a card through it, and locate a USB port and insert a USB stick into it,” said Bhirangi.

“While it was interesting to see that we could perform these precise tasks even when the locations of the socket strip/card machine/USB port were varied, what was even more exciting was the fact that you could swap out the skin, and our learned models would continue to work well. This kind of generalizability opens a host of possibilities.”
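The setup Bhirangi describes, a model that takes the raw tactile signal together with images from several viewpoints, can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration and is not taken from the paper: the `Observation` container, the `fuse` and `linear_policy` helpers, the `TACTILE_DIM` value, and the idea that each camera view has already been reduced to a small feature vector. The linear map only stands in for a learned neural network.

```python
from dataclasses import dataclass
from typing import List

# Assumed size of one raw tactile reading (illustrative value, not from the article).
TACTILE_DIM = 15


@dataclass
class Observation:
    """One time step of input: a raw tactile reading plus per-view image features."""
    tactile: List[float]                # raw sensor values, used without pre-processing
    image_features: List[List[float]]   # one (hypothetical) feature vector per camera view


def fuse(obs: Observation) -> List[float]:
    """Concatenate the raw tactile reading with the features of every camera view."""
    if len(obs.tactile) != TACTILE_DIM:
        raise ValueError(f"expected {TACTILE_DIM} tactile values, got {len(obs.tactile)}")
    fused = list(obs.tactile)
    for view in obs.image_features:
        fused.extend(view)
    return fused


def linear_policy(weights: List[List[float]], fused: List[float]) -> List[float]:
    """Hypothetical stand-in for a learned model: one dot product per action dimension."""
    for row in weights:
        if len(row) != len(fused):
            raise ValueError("each weight row must match the fused input length")
    return [sum(w * x for w, x in zip(row, fused)) for row in weights]


if __name__ == "__main__":
    obs = Observation(
        tactile=[0.0] * TACTILE_DIM,
        image_features=[[1.0, 2.0], [3.0, 4.0]],  # two views, two features each
    )
    fused = fuse(obs)
    action = linear_policy([[1.0] * len(fused)], fused)
    print(len(fused), action)
```

Because the model's input depends only on the tactile reading and not on which physical skin produced it, swapping in a replacement skin leaves this interface unchanged. Whether a learned model then keeps working depends on how consistent the signals are across skins, which is the property the researchers report.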

In the future, AnySkin could be integrated into a wider range of robotic systems and tested in additional scenarios. The researchers think it would be well suited to collecting large amounts of tactile data for training large-scale deep learning models, similar to those underpinning computer vision and natural language processing (NLP).

“We now plan to integrate AnySkin into different robot setups, beyond simple robot grippers to multifingered robot hands, and data collection devices like the Robot Utility Models stick and sensorized gloves,” added Bhirangi. “We are also looking into better ways to leverage touch information to improve visuotactile control for fine-grained robot manipulation.”

More information:

Raunaq Bhirangi et al., AnySkin: Plug-and-play Skin Sensing for Robotic Touch, arXiv (2024). DOI: 10.48550/arXiv.2409.08276


© 2024 Science X Network

Citation: Low-cost touch sensor shows promise for large-scale robotics applications (2024, October 15), retrieved 15 October 2024 from https://techxplore.com/news/2024-10-sensor-large-scale-robotics-applications.html
