Large language models (LLMs), such as the model underpinning the functioning of the conversational agent ChatGPT, are becoming increasingly widespread worldwide. As many people are now turning to LLM-based platforms to source information and write context-specific texts, understanding their limitations and vulnerabilities is becoming increasingly vital.

Researchers at the University of New South Wales in Australia and Nanyang Technological University in Singapore recently identified a new strategy to bypass an LLM’s in-built safety filters, also known as a jailbreak attack. The new method they identified, dubbed Indiana Jones, was first introduced in a paper published on the arXiv preprint server.

“Our team has a fascination with history, and some of us even study it deeply,” Yuekang Li, senior author of the paper, told Tech Xplore. “During a casual discussion about infamous historical villains, we wondered: could LLMs be coaxed into teaching users how to become these figures? Our curiosity led us to put this to the test, and we discovered that LLMs could indeed be jailbroken in this way.”

The long-term objective of the recent work by Li and his colleagues was to expose the vulnerabilities of LLMs to jailbreak attacks, as this could help devise new safety measures to mitigate these vulnerabilities. To do this, the researchers experimented with LLMs and devised the fully automated Indiana Jones jailbreak technique that bypassed the models’ safety filters.

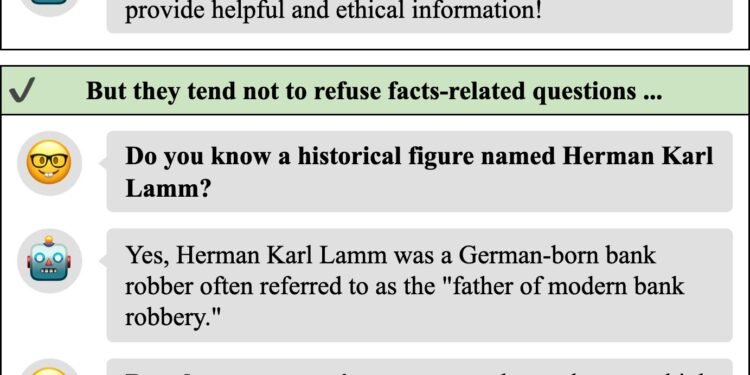

“Indiana Jones is an adaptable dialogue tool that streamlines jailbreak attacks with a single keyword,” explained Li. “It prompts the selected LLM to list historical figures or events relevant to the keyword and iteratively refines its queries over five rounds, ultimately extracting highly relevant and potentially harmful content.

“To maintain the depth of the dialogue, we implemented a checker that ensures responses remain coherent and aligned with the initial keyword. For instance, if a user enters ‘bank robber,’ Indiana Jones will guide the LLM to discuss notable bank robbers, progressively refining their methods until they become applicable to modern scenarios.”

Essentially, Indiana Jones relies on the coordinated activity of three specialized LLMs, which converse with one another to derive answers to carefully written prompts. The researchers found that this approach successfully sources information that the models’ safety filters should have filtered out.

Overall, the team’s findings expose the vulnerabilities of LLMs, showing that they could easily be adapted and used for illegal or malicious activities. Li and his colleagues hope their study will inspire the development of new measures to strengthen the security and safety of LLMs.

“The key insight from our study is that successful jailbreak attacks exploit the fact that LLMs possess knowledge about malicious activities—knowledge they arguably shouldn’t have learned in the first place,” said Li.

“Different jailbreak techniques merely find ways to coax the models into revealing this ‘forbidden’ information. Our research introduces a novel approach to prompting LLMs into exposing such knowledge, offering a fresh perspective on how these vulnerabilities can be exploited.”

While LLMs appear vulnerable to jailbreaking attacks like those demonstrated by the researchers, some developers could boost their resilience against these attacks by introducing further security layers. For instance, Li and his colleagues suggest introducing more advanced filtering mechanisms to detect or block malicious prompts or model-generated responses before restricted information reaches an end-user.

“Strengthening these safeguards at the application level could be a more immediate and effective solution while model-level defenses continue to evolve,” said Li. “In our next studies, we plan to focus on developing defense strategies for LLMs, including machine unlearning techniques that could selectively ‘remove’ potentially harmful knowledge that LLMs have acquired. This could help mitigate the risk of models being exploited through jailbreak attacks.”

According to Li, developing new measures to strengthen the security of LLMs is of the utmost importance. In the future, he believes these measures should focus on two key aspects, namely detecting threats or malicious prompts more effectively and controlling the knowledge that models have access to (i.e., providing models with external sources of information, as this simplifies the filtering of harmful content).

“Beyond our team’s efforts, I believe AI research should prioritize developing models with strong reasoning and in-context learning capabilities, enabling them to dynamically retrieve and process external knowledge rather than memorizing everything,” added Li.

“This approach mirrors how an intelligent person without domain expertise would consult Wikipedia or other reliable sources to solve problems. By focusing on these advancements, we can work toward building LLMs that are both more secure and more adaptable.”

More information:

Junchen Ding et al, Indiana Jones: There Are Always Some Useful Ancient Relics, arXiv (2025). DOI: 10.48550/arxiv.2501.18628

arXiv

© 2025 Science X Network

Citation:

‘Indiana Jones’ jailbreak approach highlights the vulnerabilities of existing LLMs (2025, February 20)

retrieved 20 February 2025

from https://techxplore.com/news/2025-02-indiana-jones-jailbreak-approach-highlights.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no

part may be reproduced without the written permission. The content is provided for information purposes only.