An artificial intelligence chip from IBM is more than a dozen times as energy efficient as conventional microchips at speech recognition. Many controversial AI systems, including ChatGPT and other large language models, as well as generative AI now used to create video and images, may benefit from the device.

The accuracy of automated transcription has improved greatly in the past decade because of AI, IBM notes. However, the hardware used to train and run these and other AI systems is becoming more expensive and energy-hungry. To train GPT-3, its state-of-the-art AI model, OpenAI spent US $4.6 million to run 9,200 GPUs for two weeks.
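As a rough sanity check, the GPT-3 figures above imply a price per GPU-hour. The sketch below assumes, as a simplification, that all 9,200 GPUs ran around the clock for exactly 14 days and that the full $4.6 million went to GPU time; the variable names are illustrative.

```python
# Back-of-the-envelope check on the GPT-3 training figures.
# Assumption: every GPU ran 24 hours a day for exactly 14 days.
TOTAL_COST_USD = 4.6e6
NUM_GPUS = 9_200
DAYS = 14

gpu_hours = NUM_GPUS * DAYS * 24              # total GPU-hours consumed
cost_per_gpu_hour = TOTAL_COST_USD / gpu_hours

print(f"{gpu_hours:,} GPU-hours")             # 3,091,200 GPU-hours
print(f"${cost_per_gpu_hour:.2f} per GPU-hour")  # $1.49 per GPU-hour
```

Under those assumptions the bill works out to roughly $1.49 per GPU-hour, which shows that the large total comes from sheer scale rather than from an unusually high hourly price.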

A key obstacle is the energy and time lost shuttling vast amounts of data between processors and memory. The energy dissipated this way can be anywhere from three to 10,000 times the energy spent on the computation itself, notes Hechen Wang, a research scientist at Intel Labs in Hillsboro, Ore., who did not take part in the new study.
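Wang's range can be turned into the share of total energy that actually goes to computation. This minimal sketch assumes, as a simplification, that data movement and computation are the only two energy costs; `compute_share` is a hypothetical helper written for illustration.

```python
# If moving data costs `ratio` times the energy of the arithmetic it feeds,
# the arithmetic accounts for only 1 / (1 + ratio) of the total energy.
# Assumption: data movement and computation are the only energy costs.
def compute_share(ratio: float) -> float:
    """Fraction of total energy spent on computation, where ratio = movement / compute."""
    return 1.0 / (1.0 + ratio)

for ratio in (3, 10_000):
    print(f"ratio {ratio}: {compute_share(ratio):.4%} of energy goes to computation")
```

At the low end of the range, computation gets about a quarter of the energy; at the high end, only about 0.01 percent. That gap is why performing computations where the data already sits is attractive.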

Neuromorphic hardware often seeks to imitate the way biological neurons compute and store data. For instance, compute-in-memory or “analog AI” microchips perform computations directly within memory.

Previous simulations from IBM suggested that analog AI could prove 40 to 140 times as energy efficient as the best GPUs for AI applications. Until now, however, practical demonstrations of these estimates had been lacking.

In the new study, IBM researchers experimented with phase-change memory. This device relies on a material that, when hit with electrical pulses, can not only switch between amorphous and crystalline phases, in a way analogous to the ones and zeroes of digital processors, but also settle into states lying between these two extremes. This means phase-change memory can encode the results of multiply-accumulate (MAC) operations using just a few resistors or capacitors in the memory. MAC operations are the most basic computation in the deep neural networks driving the current explosion in AI, and conventional approaches use hundreds or thousands of transistors to perform them, Wang notes.
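The general principle behind compute-in-memory MAC can be sketched with an idealized resistive crossbar. This is not IBM's circuit: it assumes each weight is stored as a conductance, each input is applied as a voltage, Ohm's law gives each cell's current, and the currents sum on a shared output line. It ignores noise, drift, and the analog-to-digital conversion a real chip needs, and treats conductances as signed numbers (real designs typically use a pair of devices per weight). The helpers `quantize` and `crossbar_mac` are hypothetical names.

```python
# Idealized compute-in-memory crossbar (illustrative sketch, not IBM's design).
from typing import List

def quantize(weight: float, levels: int, w_max: float) -> float:
    """Snap a weight to one of `levels` evenly spaced values in [-w_max, w_max],
    mimicking a memory cell that can hold a few intermediate states."""
    step = 2 * w_max / (levels - 1)
    clipped = max(-w_max, min(w_max, weight))
    return round((clipped + w_max) / step) * step - w_max

def crossbar_mac(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Summed current on each output line: I[j] = sum over i of G[i][j] * V[i].
    Signed conductances are a simplification; hardware would use device pairs."""
    currents = [0.0] * len(conductances[0])
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v  # Ohm's law per cell; the sum is Kirchhoff's current law
    return currents

# Two inputs feeding two outputs; weights stored with 9 possible levels.
weights = [[0.5, -1.0], [0.25, 0.75]]
stored = [[quantize(w, levels=9, w_max=1.0) for w in row] for row in weights]
print(crossbar_mac(stored, [1.0, 2.0]))  # [1.0, 0.5]
```

Every output line produces a complete dot product in one analog step, without fetching weights from a separate memory, which is where the energy savings over digital MAC units come from.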

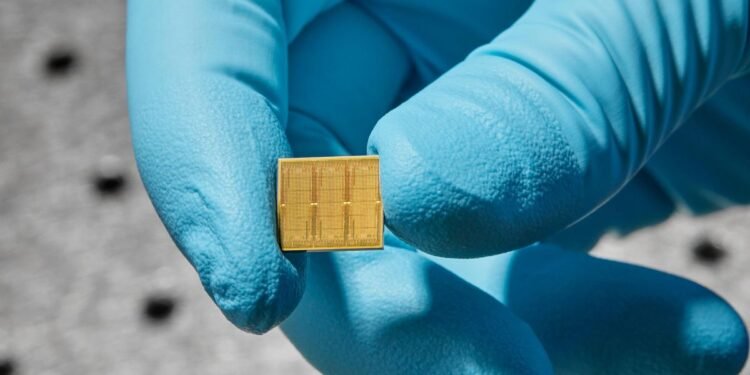

The researchers created a 14-nanometer microchip loaded with 35 million phase-change memory cells across 34 tiles. All in all, the device was capable of up to 12.4 trillion operations per second per watt, an energy efficiency dozens or even hundreds of times that of the most powerful CPUs and GPUs.
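Because one watt is one joule per second, an efficiency quoted in operations per second per watt is simply operations per joule, so its reciprocal gives the energy of a single operation. The sketch below applies this to the chip's peak figure of 12.4 trillion operations per second per watt; `joules_per_op` is an illustrative helper, and the result describes best-case rather than typical operation.

```python
# Convert ops/s/W (equivalently, ops per joule) into energy per operation.
def joules_per_op(ops_per_second_per_watt: float) -> float:
    return 1.0 / ops_per_second_per_watt

TOPS_PER_WATT = 12.4  # peak ("up to") figure reported for the IBM chip
energy = joules_per_op(TOPS_PER_WATT * 1e12)
print(f"{energy * 1e15:.1f} femtojoules per operation")  # 80.6 femtojoules per operation
```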

The scientists used two speech-recognition neural networks to test the flexibility of their device. A small network, trained on the Google Speech Commands dataset, spotted keywords for voice commands, a task where speed may be essential. A large network, trained on the LibriSpeech dataset, transcribed speech to text, where the ability to analyze large amounts of data efficiently may matter most. The device performed as accurately as neural networks run on conventional hardware, while running seven times as fast on the Google Speech Commands task and 14 times as energy efficiently on the LibriSpeech task.

Intel Labs’ Wang notes this new microchip can support transformers, the type of neural network behind the current large language models (LLMs) powering chatbots such as ChatGPT. Essentially a supercharged version of the autocomplete feature that smartphones use to predict the rest of a word a person is typing, ChatGPT has passed law and business school exams, successfully answered interview questions for software-coding jobs, written real estate listings, and developed ad content.

Transformers are also the key building block underlying generative AI, Wang says. Generative AI systems such as Stable Diffusion, Midjourney, and DALL-E have proven popular for the art they produce. The new chip “has the potential to substantially reduce the power and cost of LLMs and generative AI,” Wang says.

However, LLMs and generative AI have also ignited firestorms of criticism. For instance, ChatGPT has displayed many flaws, such as writing error-ridden articles, and generative AI is running into controversy over its implications for intellectual property law. (IBM declined to comment for this story.)

In addition, the new chip did not include all the components needed to process the data it was given. “Therefore, its performance is limited by the communication between chips and other off-chip components,” Wang says.

Wang argues that five more steps are needed on the path toward commercially viable analog AI: new circuits beyond those for MAC operations, to reduce analog AI's reliance on digital chips; hybrid analog-digital architectures, to handle computations that analog devices cannot perform; customized compilers that can map tasks efficiently onto the available hardware, to maximize performance; tailored algorithms optimized for the errors to which analog computing is prone; and applications optimized for analog chips.
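Two of these points, the hybrid analog-digital split and the errors analog hardware introduces, can be illustrated with a toy layer. This is a sketch under stated assumptions, not IBM's or Wang's design: the "analog" step is a matrix-vector product with additive Gaussian noise standing in for device variability, and the "digital" step applies a ReLU nonlinearity, which a plain crossbar does not compute on its own. All function names are hypothetical.

```python
# Toy hybrid analog-digital layer (illustrative assumptions only).
import random
from typing import List

def analog_matvec(weights: List[List[float]], x: List[float],
                  noise_std: float, rng: random.Random) -> List[float]:
    """Dot product of each weight row with x, plus Gaussian noise that
    stands in for analog device errors (an assumed noise model)."""
    return [sum(w * xi for w, xi in zip(row, x)) + rng.gauss(0.0, noise_std)
            for row in weights]

def digital_relu(values: List[float]) -> List[float]:
    """Nonlinearity evaluated in the digital domain."""
    return [max(0.0, v) for v in values]

def hybrid_layer(weights: List[List[float]], x: List[float],
                 noise_std: float, seed: int = 0) -> List[float]:
    rng = random.Random(seed)  # seeded so results are reproducible
    return digital_relu(analog_matvec(weights, x, noise_std, rng))

W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
x = [1.0, 2.0, 3.0]
exact = hybrid_layer(W, x, noise_std=0.0)   # noise-free reference
noisy = hybrid_layer(W, x, noise_std=0.02)  # with simulated analog error
print(exact, noisy)
```

Raising `noise_std` shows how quickly analog errors perturb a layer's outputs; algorithms tailored to analog hardware would need to keep accuracy acceptable despite such deviations.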

Ultimately, analog AI “is still in its infancy, and its development will be a long journey,” Wang says.

The IBM scientists detailed their findings online 23 August in the journal Nature.
