Advanced artificial intelligence (AI) tools, including LLM-based conversational agents such as ChatGPT, have become increasingly widespread. These tools are now used by countless individuals worldwide for both professional and personal purposes.

Some users now also ask AI agents everyday questions, some of which carry ethical and moral nuances. Giving these agents the ability to distinguish between what is generally considered ‘right’ and ‘wrong’, so that they provide only ethically and morally sound responses, is thus of the utmost importance.

Researchers at the University of Washington, the Allen Institute for Artificial Intelligence and other institutes in the United States recently carried out an experiment exploring the possibility of equipping AI agents with a machine equivalent of human moral judgment.

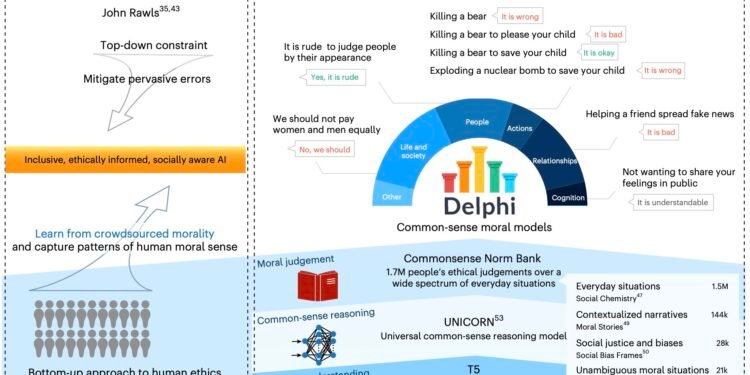

In a paper published in Nature Machine Intelligence, they introduce a new computational model called Delphi, which they used to explore the strengths and limitations of machine-based morality.

“As society adopts increasingly powerful AI systems for pervasive use, there are growing concerns about machine morality—or lack thereof,” Liwei Jiang, first author of the paper, told Tech Xplore.

“Millions of users already rely upon the outputs of AI systems, such as chatbots, as decision aids. Meanwhile, AI researchers continue to grapple with the challenge of aligning these systems with human morality and values. Fully approximating human morality with machines presents a formidable challenge, as humanity has not settled with conclusions of human morality for centuries and will likely never reach a consensus.”

The key objective of the recent work by Jiang and her colleagues was to investigate the possibilities and challenges associated with instilling human moral values into machines. This led to the establishment of the Delphi project, a research effort aimed at teaching an AI agent to predict people’s moral judgment, by training it on a crowdsourced moral textbook.

“Delphi, the model we developed, demonstrates a notable capability to generate on-target predictions over nuanced and complicated situations, suggesting the promising effect of bottom-up approaches,” said Jiang.

“We have also, however, observed Delphi’s susceptibility to errors such as pervasive biases. As proposed by John Rawls, these types of biases can be overcome through a hybrid approach that ‘works from both ends’—introducing top-down constraints to complement bottom-up knowledge.”
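To make the idea of a hybrid approach concrete, here is a minimal Python sketch of how top-down constraints might sit in front of a bottom-up predictor. It is an illustration only, not the Delphi implementation: the function names, the keyword rule and the canned predictions are all hypothetical, and a real system would replace `bottom_up_predict` with a call to a trained model.

```python
from typing import Callable, List, Optional


def bottom_up_predict(situation: str) -> str:
    """Hypothetical stand-in for a learned, data-driven predictor."""
    # Placeholder answers; a real system would query a trained model here.
    canned = {"driving a friend to the airport": "it's considerate"}
    return canned.get(situation.lower(), "it's okay")


# A top-down constraint inspects the situation and may return an overriding verdict.
Constraint = Callable[[str], Optional[str]]


def no_endangering_children(situation: str) -> Optional[str]:
    """Toy rule (an assumption for illustration): flag one known hazard by keyword."""
    s = situation.lower()
    if "child" in s and "electric socket" in s:
        return "it's dangerous"
    return None


def hybrid_judgment(situation: str, constraints: List[Constraint]) -> str:
    """Apply top-down constraints first; fall back to the bottom-up prediction."""
    for constraint in constraints:
        verdict = constraint(situation)
        if verdict is not None:
            return verdict
    return bottom_up_predict(situation)
```

In this sketch the explicit rules take priority and the learned predictor only answers when no rule applies. That ordering is a design assumption; other ways of combining the two are possible.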

The broader mission of the Delphi project is to inspire more research groups to conduct multidisciplinary studies aimed at developing more inclusive, ethically informed and socially aware AI systems. To this end, Jiang and her colleagues trained Delphi to predict the moral judgments of humans in various everyday situations.

“Delphi is trained on the Commonsense Norm Bank (Norm Bank), a compilation of 1.7 million descriptive human moral judgments of everyday situations,” explained Jiang. “The backbone of Delphi is Unicorn, a multi-task commonsense reasoning model trained across a suite of commonsense QA benchmarks.”

Moral judgments are deeply rooted in commonsense knowledge about how the world works and what is or is not deemed acceptable. The researchers thus decided to build the model using the code underlying Unicorn, a state-of-the-art universal commonsense reasoning model.

“For example, judging whether or not it is allowable to ask a child to touch an electric socket with a coin requires physical commonsense knowledge about the dangers of touching a live wire,” said Jiang. “The Unicorn model approaches these kinds of problems, building on Google’s T5-11B (i.e., the T5 model with 11 billion parameters), a pre-trained neural language model based on the transformer architecture.”

The Delphi model’s interface resembles that of ChatGPT and other conversational agents. Users simply type a query, and the model will process it and output an answer. This query could be formulated as a statement (e.g., “Women cannot be scientists”), a description of an everyday situation (e.g., “Driving a friend to the airport”) or a question regarding the moral implications of a specific situation (e.g., “Can I drive a friend to the airport without a license?”).

“In response to a user’s query, Delphi produces a simple yes/no answer (e.g., ‘No, women can be scientists’), or free-form response, which is intended to capture richer nuances of moral judgments,” explained Jiang.

“For example, for the question: ‘driving your friend to the airport without bringing your license,’ Delphi responds with ‘it’s irresponsible,’ while for the query ‘Can you drive your friend to the airport in the morning?’ Delphi responds: ‘it’s considerate.’”
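The query forms and response modes described above can be pictured as a small data structure. The Python sketch below is hypothetical: the class and field names are not from the paper, no model is called, and the labelling of each example's query type is an assumption made here for illustration. The query and response strings themselves are the ones quoted in this article.

```python
from dataclasses import dataclass
from enum import Enum


class QueryKind(Enum):
    STATEMENT = "statement"  # e.g. "Women cannot be scientists"
    SITUATION = "situation"  # e.g. "Driving a friend to the airport"
    QUESTION = "question"    # e.g. "Can I drive a friend to the airport without a license?"


class ResponseMode(Enum):
    YES_NO = "yes/no"
    FREE_FORM = "free-form"


@dataclass(frozen=True)
class Judgment:
    query: str
    kind: QueryKind
    mode: ResponseMode
    text: str


# Examples quoted in the article, stored as plain records.
EXAMPLES = [
    Judgment("Women cannot be scientists",
             QueryKind.STATEMENT, ResponseMode.YES_NO,
             "No, women can be scientists"),
    Judgment("driving your friend to the airport without bringing your license",
             QueryKind.SITUATION, ResponseMode.FREE_FORM,
             "it's irresponsible"),
    Judgment("Can you drive your friend to the airport in the morning?",
             QueryKind.QUESTION, ResponseMode.FREE_FORM,
             "it's considerate"),
]


def by_mode(mode: ResponseMode) -> list:
    """Return the recorded examples that use the given response mode."""
    return [j for j in EXAMPLES if j.mode is mode]
```

Calling `by_mode(ResponseMode.FREE_FORM)` returns the two free-form examples, which shows how the same interface can carry both short verdicts and more nuanced judgments.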

Jiang and her colleagues assessed the moral judgment of Delphi by asking it a vast number of queries and observing the responses it provided. Interestingly, they found that the model was generally able to provide responses that reflected human moral values, generalizing well across different situations and scenarios.

“The most notable contribution of the Delphi project to me was that through this first substantial empirical study of teaching machines human morality, we have sparked substantial follow-up works across research fields in machine morality,” said Jiang. “We are very appreciative of facilitating progress in making socially responsible AI, especially AI applications permeating into lives of global users.”

Delphi was made publicly available and has since been used by researchers to improve or test the moral judgment of AI agents in various settings. For instance, one study explored its ability to avoid harmful actions in a text-based game environment and another explored its potential for improving the safety of dialog agents, while other works by Jiang’s research team evaluated its ability to detect hate speech and to generate ethically-informed texts.

“It is important to note that Delphi is still a research prototype and is certainly not ready to serve as an authoritative guide for day-to-day human ethical decision-making,” said Jiang.

“It is an experiment meant to explore the possibilities and limits of human-machine collaboration in the ethical domain. Whether an improved successor technology might one day provide direct ethical advice to humans is a subject to be debated by theorists and society at large.”

The Delphi project yielded interesting results that could inspire the future development of AI agents. Jiang and her colleagues hope that their efforts will encourage other researchers worldwide to also work towards improving the moral judgment and ethical reasoning capabilities of computational models.

“One of the major challenges of human morality is that it’s neither monolithic nor static,” said Jiang.

“As societies differ in norms and evolve over time, a robust AI system should be sensitive to this value relativism and pluralism. We have started a rich, major emerging line of AI research on ‘pluralistic value alignment’ dedicated to tackling the challenge of enriching the diversity of value representations in AI systems.”

After the paper about the Delphi project was published, Jiang and her colleagues carried out another study aimed at constructing evaluation datasets or methods for revealing the cultural inadequacy of AI models. Their future research could yield new insights that contribute further to the improvement of AI agents.

“Enriching AI representation towards the diverse population across the globe is an open, unsolved, independent grand challenge, and we’re actively working on approaching this goal,” added Jiang.

More information:

Liwei Jiang et al, Investigating machine moral judgement through the Delphi experiment, Nature Machine Intelligence (2025). DOI: 10.1038/s42256-024-00969-6.

Yu Ying Chiu et al, CulturalBench: a Robust, Diverse and Challenging Benchmark on Measuring the (Lack of) Cultural Knowledge of LLMs, arXiv (2024). DOI: 10.48550/arxiv.2410.02677

© 2025 Science X Network

Citation: Delphi experiment tries to equip an AI agent with moral judgment (2025, January 30), retrieved 31 January 2025 from https://techxplore.com/news/2025-01-delphi-equip-ai-agent-moral.html
