Sunnyvale, Calif., AI supercomputer firm Cerebras says its next generation of wafer-scale AI chips can deliver double the performance of the previous generation while consuming the same amount of power. The Wafer Scale Engine 3 (WSE-3) contains 4 trillion transistors, a more than 50 percent increase over the previous generation thanks to the use of newer chipmaking technology. The company says it will use the WSE-3 in a new generation of AI computers, which are now being installed in a datacenter in Dallas to form a supercomputer capable of 8 exaflops (8 billion billion floating-point operations per second). Separately, Cerebras has entered into a joint development agreement with Qualcomm that aims to boost a metric of price and performance for AI inference 10-fold.

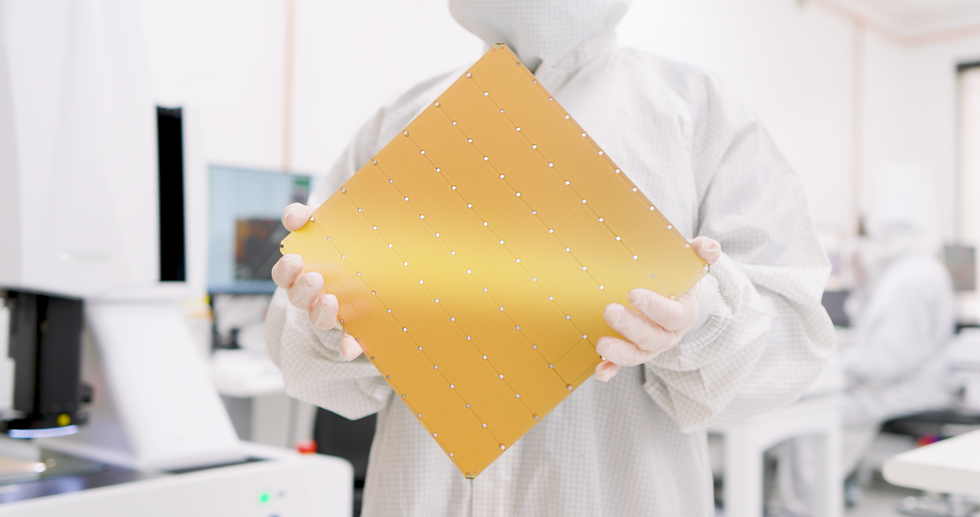

With WSE-3, Cerebras can keep its claim to producing the largest single chip in the world. A square measuring 21.5 centimeters on a side, it uses nearly an entire 300-millimeter wafer of silicon to make one chip. Chipmaking equipment is typically limited to producing silicon dies of no more than about 800 square millimeters. Chipmakers have begun to escape that limit by using 3D integration and other advanced packaging technology to combine multiple dies. But even in these systems, the transistor count is in the tens of billions.

As usual, such a large chip comes with some mind-blowing superlatives.

| Specification | WSE-3 |
| --- | --- |
| Transistors | 4 trillion |
| Square millimeters of silicon | 46,225 |
| AI cores | 900,000 |
| AI compute | 125 petaflops |
| On-chip memory | 44 gigabytes |
| Memory bandwidth | 21 petabytes per second |
| Network fabric bandwidth | 214 petabits per second |
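As a rough check on those figures, the short Python sketch below recomputes the silicon area from the 21.5-centimeter side length and compares it with the roughly 800-square-millimeter ceiling on conventional dies. The variable names are illustrative only, and the density figure is a simple average over the whole chip, not a number Cerebras has published.

```python
# Figures quoted in the article; names are illustrative, not Cerebras terminology.
SIDE_CM = 21.5             # edge length of the square WSE-3
DIE_LIMIT_MM2 = 800        # approximate size ceiling for a conventional die
TRANSISTORS = 4e12         # 4 trillion

side_mm = SIDE_CM * 10                     # centimeters to millimeters
area_mm2 = side_mm ** 2                    # area of a square
die_equivalents = area_mm2 / DIE_LIMIT_MM2
avg_transistors_per_mm2 = TRANSISTORS / area_mm2  # crude whole-chip average

print(f"Area: {area_mm2:,.0f} mm^2")       # Area: 46,225 mm^2
print(f"Equivalent to about {die_equivalents:.1f} maximum-size conventional dies")
print(f"Average density: {avg_transistors_per_mm2 / 1e6:.1f} million transistors per mm^2")
```

The computed area matches the 46,225 square millimeters listed above, which works out to nearly 58 of the largest dies that ordinary chipmaking equipment can produce.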

You can see the effect of Moore’s Law in the succession of WSE chips. The first, debuting in 2019, was made using TSMC’s 16-nanometer tech. For WSE-2, which arrived in 2021, Cerebras moved on to TSMC’s 7-nm process. WSE-3 is built with the foundry giant’s 5-nm tech.

The number of transistors has more than tripled since that first megachip. Meanwhile, what they’re being used for has also changed. For example, growth in the number of AI cores on the chip has largely leveled off, as has growth in the amount of memory and the internal bandwidth. Nevertheless, the improvement in performance, measured in floating-point operations per second (flops), has outpaced all other measures.

CS-3 and the Condor Galaxy 3

The computer built around the new AI chip, the CS-3, is designed to train new generations of giant large language models, 10 times larger than OpenAI’s GPT-4 and Google’s Gemini. The company says the CS-3 can train neural network models of up to 24 trillion parameters, more than 10 times the size of today’s largest LLMs, without resorting to the set of software tricks that other computers need. According to Cerebras, that means the software needed to train a one-trillion-parameter model on the CS-3 is as straightforward as that for training a one-billion-parameter model on GPUs.

As many as 2,048 systems can be combined, a configuration that would chew through training the popular LLM Llama 70B from scratch in just one day. Nothing quite that big is in the works, though, the company says. The first CS-3-based supercomputer, Condor Galaxy 3 in Dallas, will be made up of 64 CS-3s. As with its CS-2-based sibling systems, Abu Dhabi’s G42 owns the system. Together with Condor Galaxy 1 and 2, that makes a network of 16 exaflops.
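The exaflop totals follow from simple multiplication, as the Python sketch below shows. It assumes each CS-3 contributes the 125 petaflops quoted for a single WSE-3, which the article implies but does not state outright, and it treats all numbers as peak figures. The variable names are illustrative.

```python
# Assumption: one CS-3 delivers the 125 petaflops quoted for one WSE-3 chip.
PETAFLOPS_PER_CS3 = 125
PETAFLOPS_PER_EXAFLOP = 1000

CG3_SYSTEMS = 64          # CS-3s in Condor Galaxy 3
MAX_SYSTEMS = 2048        # largest configuration Cerebras says is possible
NETWORK_EXAFLOPS = 16     # Condor Galaxy 1, 2, and 3 combined, per the article

cg3_exaflops = CG3_SYSTEMS * PETAFLOPS_PER_CS3 / PETAFLOPS_PER_EXAFLOP
older_exaflops = NETWORK_EXAFLOPS - cg3_exaflops   # implied share of Condor Galaxy 1 and 2
max_exaflops = MAX_SYSTEMS * PETAFLOPS_PER_CS3 / PETAFLOPS_PER_EXAFLOP

print(f"Condor Galaxy 3: {cg3_exaflops:g} exaflops")           # 8 exaflops
print(f"Condor Galaxy 1 and 2: {older_exaflops:g} exaflops")   # 8 exaflops
print(f"Hypothetical 2,048-system cluster: {max_exaflops:g} exaflops")  # 256 exaflops
```

Under that assumption, 64 systems give exactly the 8 exaflops cited for the Dallas machine, and the existing Condor Galaxy systems account for the other 8.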

“The existing Condor Galaxy network has trained some of the leading open-source models in the industry, with tens of thousands of downloads,” said Kiril Evtimov, group CTO of G42 in a press release. “By doubling the capacity to 16 exaflops, we look forward to seeing the next wave of innovation Condor Galaxy supercomputers can enable.”

A Deal With Qualcomm

While Cerebras computers are built for training, Cerebras CEO Andrew Feldman says it’s inference, the execution of neural network models, that is the real limit to AI’s adoption. According to Cerebras estimates, if every person on the planet used ChatGPT, it would cost US $1 trillion annually, not to mention an overwhelming amount of fossil-fueled energy. (Operating costs are proportional to the size of the neural network model and the number of users.)

So Cerebras and Qualcomm have formed a partnership with the goal of bringing the cost of inference down by a factor of 10. Cerebras says their solution will involve applying neural network techniques such as weight data compression and sparsity (the pruning of unneeded connections). The Cerebras-trained networks would then run efficiently on Qualcomm’s new inference chip, the AI 100 Ultra, the company says.
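To give a sense of what pruning means in practice, here is a minimal Python sketch of magnitude pruning, one common way to introduce sparsity: the weights closest to zero are set to exactly zero, so hardware can skip them. This is a generic illustration, not the method Cerebras or Qualcomm has described, and the function name `prune_by_magnitude` and the sample weights are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed.

    Generic magnitude pruning for illustration; not Cerebras's actual technique.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from the weight nearest zero to the one farthest from it.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)            # leave the caller's list untouched
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights for one small layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Half of the connections are gone, but the three largest weights, which contribute most to the layer's output, are intact. Real systems typically retrain after pruning to recover any lost accuracy.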
