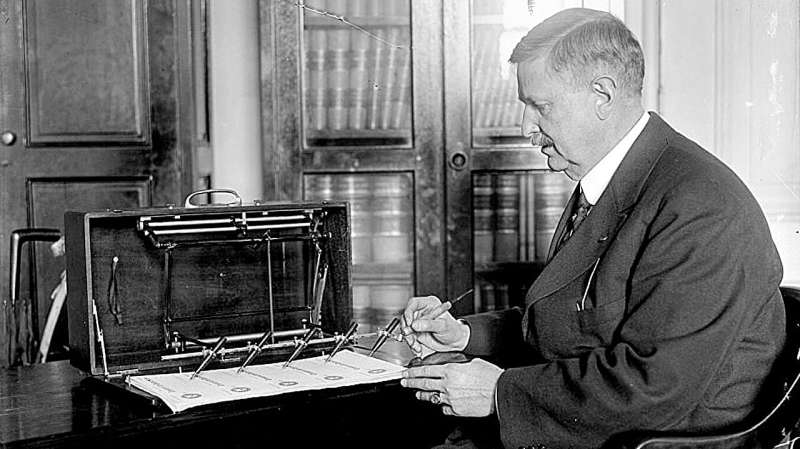

Long before people worried about the effects of ChatGPT on personal communication and social norms, there was the autopen and its precursors—automatic signature machines—that some feared would be misused to forge signatures and sign war declarations and other bills.

In a new analysis, Cornell researchers have examined three autopen controversies to see what they reveal about when it is okay—and not okay—to automate communication. They found that while the autopen made communication faster, its use instilled mistrust and reduced the perceived value of the signed item. They drew parallels to current anxieties around ChatGPT, whose use can lead to similar outcomes.

“These sorts of tools can be really useful and effective in making certain types of communications more efficient,” but context is important, said Pegah Moradi, a doctoral student in the field of information science and first author of the new study. “It feels like you’ve been tricked when you find out that these things were signed by autopen.”

Her study, “‘A Fountain Pen Come to Life’: The Anxieties of the Autopen,” appeared Jan. 1 in the International Journal of Communication. Karen Levy, associate professor of information science in the Cornell Ann S. Bowers College of Computing and Information Science and associate member of the Law School faculty, is senior author on the study.

While the U.S. government routinely uses autopens to sign contracts, letters and memos, controversy can erupt when celebrities secretly use them for autographs.

For example, in 2022, Bob Dylan put out a signed essay collection for $599, which came with a letter stating “the book you hold in your hand has been hand-signed by Bob Dylan.” It was actually signed by an autopen while Dylan was suffering from vertigo. When eagle-eyed fans discovered the replicated signatures and complained, Dylan apologized and the publisher offered a full refund.

Fans perceived this use of the autopen as a violation because the value of the signed object comes from Dylan having held it briefly in his hands. “It gives the artifact itself rarity, a value that you wouldn’t get when it’s a replica signature,” Moradi said.

In another scandal, many were outraged to learn that in 2004, Defense Secretary Donald Rumsfeld used an autopen to sign more than 1,000 condolence letters to families who had lost a loved one in the Iraq War. Rumsfeld’s defense was that the machine allowed him to mail the letters more expeditiously. As Moradi and Levy point out, however, the effort needed to sign each letter is precisely what gives it significance.

“That you actually take the time to put pen to paper is important in this case,” Moradi said. “When you circumvent that with technology, you reduce that value.”

This is analogous to the outrage that followed when Vanderbilt University employees used ChatGPT to create a condolence letter sent to students after a mass shooting at Michigan State University in 2023, Moradi said. Many students were angry that the employees didn’t take the time to write even a boilerplate message themselves.

In the third case, celebrity chef Gordon Ramsay attempted to dodge responsibility for paying rent on his London pub by claiming the rental contract was invalid because his father-in-law, who is also his agent, signed it with an autopen. His defense failed, however, because electronic signatures are legal—they are “symbolic of a process of approval,” Moradi and Levy write. Even the U.S. Department of Justice determined that a bill is legal if the president’s signature is added via autopen.

Greater use and awareness of the autopen have engendered greater mistrust of the authenticity of celebrity signatures, Moradi said. In a similar phenomenon, known as the “liar’s dividend,” the use of generative artificial intelligence (AI) to spread misinformation and fake images is causing people to be skeptical of fake and real items alike.

While experts can’t predict how generative AI will shift social expectations in the future, the researchers suggest that users take a lesson from the autopen and be careful about when they choose to sacrifice authenticity for efficiency.

“Obviously, these new technologies can do things and unlock potential that we maybe wouldn’t have thought of 100 years ago,” Moradi said. “But a lot of the changes that occur are just so similar to the changes that have happened throughout human history. We can glean a lot of new insights from older technologies.”

More information: Pegah Moradi et al, ‘A Fountain Pen Come to Life’: The Anxieties of the Autopen, International Journal of Communication (2024).

Provided by Cornell University

Citation: Autopen shows perils of automation in communications (2024, February 1), retrieved 1 February 2024 from https://techxplore.com/news/2024-02-autopen-perils-automation-communications.html
