Unsupervised domain adaptation has attracted a great deal of attention and research over the past decades. Among deep learning-based methods, autoencoder-based approaches have achieved sound performance thanks to their fast convergence and because they require no labels. However, existing autoencoder methods simply connect the features generated by different autoencoders in series, which makes discriminative representation learning difficult and fails to find the real cross-domain features.

To address these problems, a research team led by Zhu Yi published their research in Frontiers of Computer Science.

The team proposed a novel representation learning method based on an integrated autoencoder for unsupervised domain adaptation. A sparse autoencoder is introduced to combine the inter- and inner-domain features for minimizing deviations in different domains and improving the performance of unsupervised domain adaptation. Extensive experiments on three benchmark data sets clearly validate the effectiveness of the proposed method compared with several state-of-the-art baseline methods.

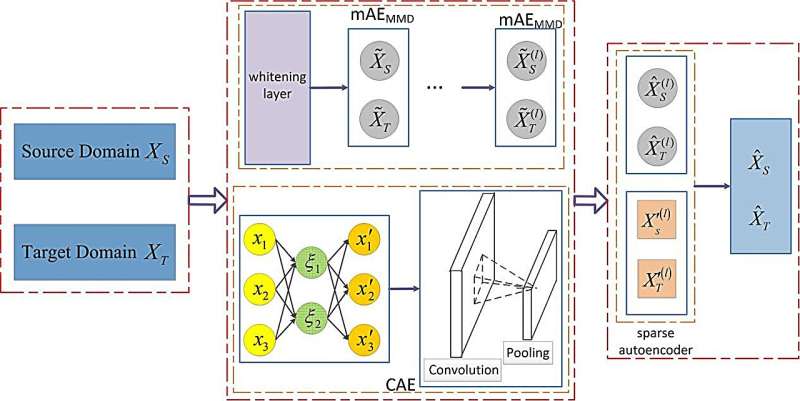

The researchers obtain the inter- and inner-domain features with two different autoencoders, extracting higher-level, more abstract representations that capture different characteristics of the original input data in the source and target domains. A whitening layer is introduced to process the features in inter-domain representation learning. A sparse autoencoder then combines the inter- and inner-domain features to minimize deviations in different domains and improve the performance of unsupervised domain adaptation.

First, the marginalized AutoEncoder with Maximum Mean Discrepancy (mAEMMD) maps the original input data into a latent feature space, generating inter-domain representations for the source and target domains simultaneously.
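To make the Maximum Mean Discrepancy part of this step concrete, here is a minimal sketch of the statistic itself. It is not the team's mAEMMD model: the article does not give the kernel or the training objective, so the Gaussian kernel, the `gamma` value, the biased estimator, and the toy data are all assumptions chosen for illustration.

```python
import math

def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors (kernel choice is an assumption)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def mean_kernel(xs, ys, gamma):
    """Average kernel value over all pairs drawn from xs and ys."""
    total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
    return total / (len(xs) * len(ys))

def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of the squared MMD between two samples."""
    return (mean_kernel(source, source, gamma)
            + mean_kernel(target, target, gamma)
            - 2.0 * mean_kernel(source, target, gamma))

# Toy "source" features and a copy shifted by +2 in every dimension ("target").
source = [[0.0, 0.1], [0.2, -0.1], [-0.1, 0.0]]
shifted = [[v + 2.0 for v in row] for row in source]

same = mmd_squared(source, source)   # identical samples: no discrepancy
far = mmd_squared(source, shifted)   # shifted samples: clearly positive
```

A model that uses MMD as a training signal would try to drive a quantity like `far` toward zero in the latent space, so that source and target features become hard to tell apart.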

Second, the Convolutional AutoEncoder (CAE) is used to obtain inner-domain representations while keeping the relative location of features, which preserves the spatial information of the input data in the source and target domains.
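The point about relative location can be illustrated with a single convolution, the building block of a convolutional autoencoder. The sketch below is not the CAE from the paper; the 2x2 kernel, the toy images, and the helper names are hypothetical and only show that the response map moves with the pattern in the input.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def argmax2d(grid):
    """Return (row, col) of the largest value in a 2-D list."""
    cells = ((i, j) for i in range(len(grid)) for j in range(len(grid[0])))
    return max(cells, key=lambda ij: grid[ij[0]][ij[1]])

def image_with_blob(top, left, size=5):
    """Toy size x size image with a bright 2x2 blob whose corner is at (top, left)."""
    img = [[0.0] * size for _ in range(size)]
    for di in range(2):
        for dj in range(2):
            img[top + di][left + dj] = 1.0
    return img

kernel = [[1.0, 1.0], [1.0, 1.0]]  # hypothetical filter that responds to the blob

peak_a = argmax2d(conv2d_valid(image_with_blob(0, 1), kernel))
peak_b = argmax2d(conv2d_valid(image_with_blob(2, 3), kernel))
```

Moving the blob from (0, 1) to (2, 3) moves the peak response by the same offset, which is the sense in which convolutional features retain where something appeared in the input.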

Third, once these two autoencoders have produced higher-level features, a sparse autoencoder combines the inter- and inner-domain representations, and the resulting feature representations are used to minimize deviations in different domains.
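As a rough sketch of what combining the two feature sets with a sparse autoencoder could look like, the code below concatenates stand-in inter- and inner-domain features, passes them through one sigmoid encoder layer, and evaluates the KL-divergence sparsity penalty commonly used in sparse autoencoders. The article does not describe the actual architecture, so the concatenation, the layer sizes, the random weights, and the sparsity target `rho` are all assumptions, and no training is performed.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def encode(x, weights, biases):
    """One sigmoid hidden layer: h_j = sigmoid(w_j . x + b_j)."""
    return [sigmoid(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def kl_sparsity(hidden_batch, rho=0.05):
    """Sum over hidden units of KL(rho || mean activation of that unit)."""
    n = len(hidden_batch)
    penalty = 0.0
    for j in range(len(hidden_batch[0])):
        rho_hat = sum(h[j] for h in hidden_batch) / n
        penalty += (rho * math.log(rho / rho_hat)
                    + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))
    return penalty

rng = random.Random(0)
# Random stand-ins for the outputs of the two upstream autoencoders (4 samples each).
inter_feats = [[rng.random() for _ in range(3)] for _ in range(4)]
inner_feats = [[rng.random() for _ in range(2)] for _ in range(4)]

# Assumption: the sparse autoencoder takes the concatenated features as input.
combined = [a + b for a, b in zip(inter_feats, inner_feats)]

hidden_dim = 4  # hypothetical size of the combined representation
weights = [[rng.uniform(-0.5, 0.5) for _ in range(5)] for _ in range(hidden_dim)]
biases = [0.0] * hidden_dim

hidden = [encode(x, weights, biases) for x in combined]
penalty = kl_sparsity(hidden)
```

In a trained sparse autoencoder this penalty would be added to the reconstruction loss, pushing each hidden unit to stay mostly inactive so that the combined representation uses only a few units per input.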

Future work should focus on learning representations of graph data, in which relationships are represented with an adjacency matrix, and on exploring relationships in heterogeneous graph data with autoencoder networks based on convolutional operations.

More information:

Yi Zhu et al, Representation learning via an integrated autoencoder for unsupervised domain adaptation, Frontiers of Computer Science (2023). DOI: 10.1007/s11704-022-1349-5

Provided by

Frontiers Journals

Citation:

An approach for unsupervised domain adaptation based on an integrated autoencoder (2023, November 14), retrieved 14 November 2023 from https://techxplore.com/news/2023-11-approach-unsupervised-domain-based-autoencoder.html
