Noise-canceling headphones are a godsend for living and working in loud environments. They automatically identify background sounds and cancel them out for much-needed peace and quiet. However, typical noise-canceling fails to distinguish between unwanted background sounds and crucial information, leaving headphone users unaware of their surroundings.

Shyam Gollakota, from the University of Washington, is an expert in using AI tools for real-time audio processing. His team created a system for targeted speech hearing in noisy environments and developed AI-based headphones that selectively filter out specific sounds while preserving others. He presents his work on May 16 as part of a joint meeting of the Acoustical Society of America and the Canadian Acoustical Association, running May 13–17 at the Shaw Center in downtown Ottawa, Ontario, Canada.

“Imagine you are in a park, admiring the sounds of chirping birds, but then you have the loud chatter of a nearby group of people who just can’t stop talking,” said Gollakota. “Now imagine if your headphones could grant you the ability to focus on the sounds of the birds while the rest of the noise just goes away. That is exactly what we set out to achieve with our system.”

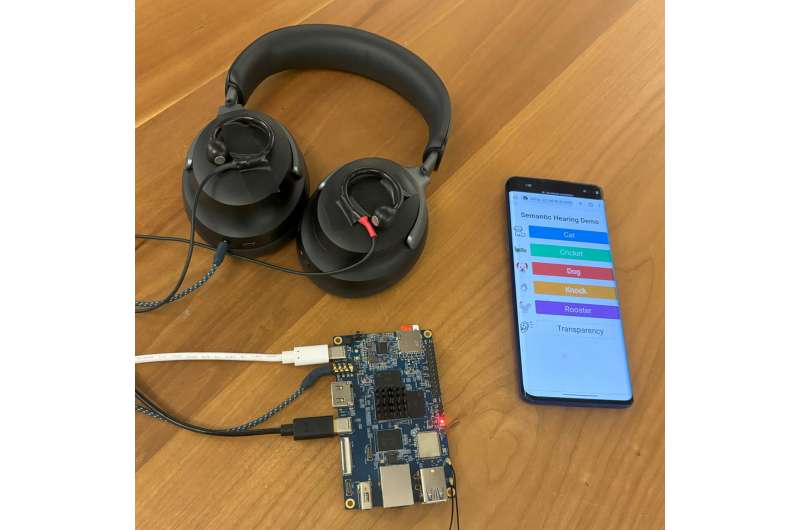

Gollakota and his team combined noise-canceling technology with a smartphone-based neural network trained to identify 20 different environmental sound categories. These include alarm clocks, crying babies, sirens, car horns, and birdsong. When a user selects one or more of these categories, the software identifies and plays those sounds through the headphones in real time while filtering out everything else.
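The selection step can be sketched as follows. This is an illustrative sketch, not the team's implementation, and it assumes that an upstream separation model (not shown) has already split each chunk of audio into one estimated waveform per sound category. The function name `mix_selected` and the category labels are hypothetical. Under that assumption, "play the chosen sounds and filter out everything else" amounts to summing only the tracks the user selected.

```python
from typing import AbstractSet, Dict, List, Sequence


def mix_selected(
    separated: Dict[str, Sequence[float]],
    selected: AbstractSet[str],
) -> List[float]:
    """Sum the separated tracks for the user-selected categories.

    `separated` maps a (hypothetical) category label to that category's
    estimated waveform for the current chunk. All tracks must have the
    same length. Tracks whose label is not in `selected` are dropped,
    which stands in for "filtering out everything else".
    """
    lengths = {len(track) for track in separated.values()}
    if len(lengths) > 1:
        raise ValueError("all separated tracks must have the same length")
    n = lengths.pop() if lengths else 0

    out = [0.0] * n
    for label, track in separated.items():
        if label in selected:
            # Add this category's samples into the output mix.
            for i, sample in enumerate(track):
                out[i] += sample
    return out


if __name__ == "__main__":
    chunk = {
        "birdsong": [0.1, 0.2, -0.1],
        "siren": [0.5, 0.5, 0.5],
        "chatter": [0.3, -0.3, 0.0],
    }
    # Keep only the birds; the siren and chatter are discarded.
    print(mix_selected(chunk, {"birdsong"}))  # [0.1, 0.2, -0.1]
```

In a real system the hard part is the separation model itself; this sketch only shows why, once sounds are separated by category, honoring a user's selection is simple.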

Making this system work seamlessly was not an easy task, however.

“To achieve what we want, we first needed a high-level intelligence to identify all the different sounds in an environment,” said Gollakota.

“Then, we needed to separate the target sounds from all the interfering noises. If this is not hard enough, whatever sounds we extracted needed to sync with the user’s visual senses, since they cannot be hearing someone two seconds too late. This means the neural network algorithms must process sounds in real time in under a hundredth of a second, which is what we achieved.”
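To put the "under a hundredth of a second" figure in concrete terms, here is a back-of-the-envelope sketch. A hundredth of a second is 10 ms. The sample rates below (16 kHz and 44.1 kHz) are assumptions chosen for illustration, since the article does not state one, and the helper names are hypothetical. The latency check is a simplification: it assumes that buffering a chunk delays output by at least that chunk's duration and that processing adds its compute time on top, and it ignores other sources of delay such as audio drivers or wireless links.

```python
def max_chunk_samples(sample_rate_hz: int, budget_ms: int) -> int:
    """Largest whole number of samples that spans at most `budget_ms` of audio."""
    return sample_rate_hz * budget_ms // 1000


def fits_budget(chunk_ms: float, compute_ms: float, budget_ms: float = 10.0) -> bool:
    """Simplified latency check: chunk duration plus compute time must stay
    strictly under the budget (10 ms, i.e. a hundredth of a second)."""
    return chunk_ms + compute_ms < budget_ms


if __name__ == "__main__":
    print(max_chunk_samples(44_100, 10))  # 441 samples at 44.1 kHz
    print(max_chunk_samples(16_000, 10))  # 160 samples at 16 kHz
    print(fits_budget(6.0, 3.0))          # True: 9 ms total
    print(fits_budget(8.0, 4.0))          # False: 12 ms total
```

The point of the arithmetic is that the network sees only a few hundred samples at a time and must finish each chunk in just a few milliseconds, which is why running it on a smartphone in real time is demanding.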

The team also applied this AI-powered approach to human speech. Using similar content-aware techniques, their algorithm can identify a speaker and isolate that person's voice from ambient noise in real time, making conversations clearer.

Gollakota is excited to be at the forefront of the next generation of audio devices.

“We have a very unique opportunity to create the future of intelligent hearables that can enhance human hearing capability and augment intelligence to make lives better,” said Gollakota.

More information:

Technical program: https://eppro02.ativ.me/src/EventPilot/php/express/web/planner.php?id=ASASPRING24

Acoustical Society of America

Citation:

AI-powered noise-filtering headphones give users the power to choose what to hear (2024, May 16) retrieved 16 May 2024 from https://techxplore.com/news/2024-05-ai-powered-noise-filtering-headphones.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.