AI models are always surprising us, not just with what they can do, but with what they can’t, and why. An interesting new behavior is both superficial and revealing about these systems: they pick random numbers as if they’re human beings.

But first, what does that even mean? Can’t people pick a number randomly? And how can you tell whether someone is doing so successfully? This is actually a very old and well-known limitation of ours as humans: we overthink and misunderstand randomness.

Tell a person to predict heads or tails for 100 coin flips, and compare that to 100 actual coin flips. You can almost always tell them apart because, counterintuitively, the real coin flips look less random. There will often be, for example, six or seven heads or tails in a row, something almost no human predictor includes in their 100.
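To see why long streaks are normal, here is a minimal Python sketch (our own illustration, not part of any experiment described here) that simulates batches of 100 fair coin flips and measures how often a batch contains a streak of a given length. The function names and trial counts are arbitrary choices, and Python’s built-in `random` module stands in for a fair coin.

```python
import random

def longest_run(flips):
    """Return the length of the longest streak of identical outcomes."""
    best = current = 0
    previous = None
    for flip in flips:
        # Extend the streak if this flip matches the last one, else start over.
        current = current + 1 if flip == previous else 1
        previous = flip
        best = max(best, current)
    return best

def share_with_run_at_least(k, n_flips=100, trials=5_000, seed=0):
    """Estimate how often n_flips fair coin flips contain a streak of k or more."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    hits = 0
    for _ in range(trials):
        flips = [rng.choice("HT") for _ in range(n_flips)]
        if longest_run(flips) >= k:
            hits += 1
    return hits / trials

for k in (5, 6, 7, 8):
    print(f"streak of {k}+ in 100 flips: {share_with_run_at_least(k):.0%}")
```

Running it, you should find that a streak of six or more shows up in most batches of 100 flips, which is exactly the kind of sequence a human predictor tends to leave out.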

It’s the same when you ask someone to pick a number between 0 and 100. People almost never pick 1 or 100. Multiples of 5 are rare, as are numbers with repeating digits like 66 and 99. They often pick numbers ending in 7, generally from somewhere in the middle.

There are countless examples of this kind of predictability in psychology. But that doesn’t make it any less weird when AIs do the same thing.

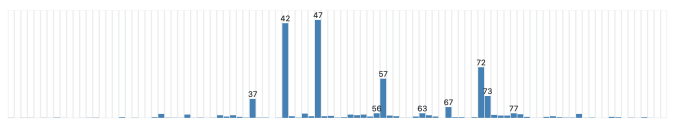

Yes, some curious engineers over at Gramener performed an informal but nevertheless fascinating experiment in which they simply asked several major LLM chatbots to pick a random number between 0 and 100.

Reader, the results were not random.

All three models tested had a “favorite” number that was always their answer in the most deterministic mode, and that still appeared most often at higher “temperatures,” a setting that increases the variability of their results.
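A rough way to picture what temperature does: a model scores each possible answer, the scores are divided by the temperature, and a softmax turns them into probabilities. The Python sketch below is a toy under stated assumptions. The candidate answers and their scores in `LOGITS` are invented for illustration, not measured from any of the models tested, and treating temperature 0 as “always take the top-scoring answer” mirrors how greedy decoding is commonly described rather than any particular vendor’s implementation.

```python
import math
import random
from collections import Counter

# Hypothetical scores for a few candidate answers (invented, not from a real model).
LOGITS = {"47": 5.0, "42": 4.0, "37": 3.5, "73": 3.0, "57": 2.5, "100": 0.0}

def softmax_with_temperature(logits, temperature):
    """Turn scores into probabilities; a higher temperature flattens them."""
    scaled = {k: v / temperature for k, v in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - peak) for k, v in scaled.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}

def sample(logits, temperature, rng):
    """Pick one answer. Temperature 0 is treated as greedy (assumption, see above)."""
    if temperature == 0:
        return max(logits, key=logits.get)
    probs = softmax_with_temperature(logits, temperature)
    answers = list(probs)
    return rng.choices(answers, weights=[probs[a] for a in answers], k=1)[0]

rng = random.Random(42)
for t in (0, 0.5, 1.0, 2.0):
    counts = Counter(sample(LOGITS, t, rng) for _ in range(1_000))
    print(f"temperature {t}: {counts.most_common(3)}")
```

With these made-up scores, temperature 0 returns “47” every time, while higher temperatures spread the picks across more answers, with “47” still the most frequent. That is the pattern described above: raising the temperature adds variety without dethroning the favorite.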

OpenAI’s GPT-3.5 Turbo really likes 47. Previously, it liked 42, a number made famous, of course, by Douglas Adams in The Hitchhiker’s Guide to the Galaxy as the answer to life, the universe, and everything.

Anthropic’s Claude 3 Haiku went with 42. And Gemini likes 72.

More interestingly, all three models demonstrated human-like bias in the numbers they selected, even at high temperature.

All tended to avoid low and high numbers; Claude never went above 87 or below 27, and even those were outliers. Numbers with repeated digits were scrupulously avoided: no 33s, 55s, or 66s, though 77 showed up (it ends in 7). There were almost no round numbers, though Gemini once, at the highest temperature, went wild and picked 0.
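The biases listed above are easy to check mechanically. The following Python sketch tags each answer with the traits the article mentions (repeated digits, multiples of 5, ending in 7, extreme values) and reports how often each occurs, next to a uniform baseline over 0 to 100. The cutoff for “extreme” (below 10 or above 90) is our own assumption, and the `answers` list is a made-up placeholder, not the Gramener data.

```python
from collections import Counter

TRAITS = ("repeated_digit", "multiple_of_5", "ends_in_7", "extreme")

def human_like_traits(n):
    """Tag an integer in 0..100 with the traits discussed in the article."""
    digits = str(n)
    return {
        "repeated_digit": len(digits) == 2 and digits[0] == digits[1],  # 11, 22, ..., 99
        "multiple_of_5": n % 5 == 0,  # "round" numbers, including 0 and 100
        "ends_in_7": n % 10 == 7,
        "extreme": n < 10 or n > 90,  # assumed cutoff, chosen for illustration
    }

def summarize(answers):
    """Return the share of answers that show each trait."""
    answers = list(answers)
    totals = Counter()
    for n in answers:
        for trait, present in human_like_traits(n).items():
            totals[trait] += int(present)
    return {trait: totals[trait] / len(answers) for trait in TRAITS}

# Hypothetical sample of chatbot answers, invented for this example.
answers = [47, 42, 47, 37, 73, 47, 57, 42, 67, 47]

baseline = summarize(range(101))  # every number from 0 to 100 picked once
observed = summarize(answers)
for trait in TRAITS:
    print(f"{trait:15} sample {observed[trait]:5.0%}   uniform {baseline[trait]:5.0%}")
```

Under a truly uniform pick from 0 to 100, about 9% of answers would have repeated digits, 21% would be multiples of 5, 10% would end in 7, and 20% would fall in the assumed “extreme” range, so a sample that skips the first and last categories entirely while overrepresenting numbers ending in 7 is a clear sign of bias.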

Why should this be? AIs aren’t human! Why would they care what “seems” random? Have they finally achieved consciousness and this is how they show it?!

No. The answer, as is usually the case with these things, is that we are anthropomorphizing a step too far. These models don’t care about what is and isn’t random. They don’t know what “randomness” is! They answer this question the same way they answer all the rest: by looking at their training data and repeating what was most often written after a question that looked like “pick a random number.” The more often an answer appears there, the more often the model repeats it.

Where in their training data would they see 100, if almost no one ever responds that way? For all the AI model knows, 100 is not an acceptable answer to that question. With no actual reasoning capability, and no understanding of numbers whatsoever, it can only answer like the stochastic parrot it is.

It’s an object lesson in LLM habits, and the humanity they can appear to show. In every interaction with these systems, one must bear in mind that they have been trained to act the way people do, even if that was not the intent. That’s why pseudanthropy is so difficult to avoid or prevent.

I wrote in the headline that these models “think they’re people,” but that’s a bit misleading. They don’t think at all. But in their responses, at all times, they are imitating people, without any need to know or think. Whether you’re asking for a chickpea salad recipe, investment advice, or a random number, the process is the same. The results feel human because they are human, drawn directly from human-produced content and remixed for your convenience and, of course, for big AI’s bottom line.