Artists urgently need stronger defenses to protect their work from being used to train AI models without their consent.

So say a team of researchers who have uncovered significant weaknesses in two of the art protection tools most used by artists to safeguard their work.

According to their creators, Glaze and NightShade were both developed to protect human creatives against the invasive uses of generative AI.

The tools are popular with digital artists who want to stop AI models (like the AI art generator Stable Diffusion) from copying their unique styles without consent. Together, Glaze and NightShade have been downloaded almost 9 million times.

But according to an international group of researchers, these tools have critical weaknesses that mean they cannot reliably stop AI models from training on artists’ work.

The tools add subtle, invisible distortions (known as poisoning perturbations) to digital images. These “poisons” are designed to confuse AI models during training. Glaze takes a passive approach, hindering the AI model’s ability to extract key stylistic features. NightShade goes further, actively corrupting the learning process by causing the AI model to associate an artist’s style with unrelated concepts.
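Why would a change too small to see matter? A deliberately simplified toy model can show it. It is purely illustrative and is not how Glaze or NightShade actually compute their perturbations, which the article does not detail. In the toy, an image is a flat list of pixel values in [0, 1], and a model's "style feature" is a hypothetical linear score. Under an assumed per-pixel budget `eps`, nudging each pixel by at most `eps` in the direction of its weight shifts the score by `eps` times the sum of the absolute weights. Many imperceptible changes therefore add up to a large one. All function names here are hypothetical.

```python
"""Toy illustration: many tiny, bounded pixel changes can add up to a large
shift in a (hypothetical) linear feature. Not Glaze's or NightShade's method."""

from typing import List, Sequence


def linear_feature(image: Sequence[float], weights: Sequence[float]) -> float:
    """Hypothetical stand-in for a model's style feature: a weighted pixel sum."""
    return sum(x * w for x, w in zip(image, weights))


def bounded_perturbation(weights: Sequence[float], eps: float) -> List[float]:
    """Move each pixel by eps in the direction that raises the feature.

    For a linear feature this is the largest increase achievable when no
    pixel may change by more than eps (an L-infinity budget)."""
    return [eps if w > 0 else -eps if w < 0 else 0.0 for w in weights]


def apply_perturbation(image: Sequence[float], delta: Sequence[float]) -> List[float]:
    """Add the perturbation and clip back to the valid pixel range [0, 1]."""
    return [min(1.0, max(0.0, x + d)) for x, d in zip(image, delta)]


if __name__ == "__main__":
    n = 1000
    image = [0.5] * n                                    # flat grey toy image
    weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
    delta = bounded_perturbation(weights, eps=0.01)      # at most 0.01 per pixel
    protected = apply_perturbation(image, delta)
    print(max(abs(p - x) for p, x in zip(protected, image)))
    print(linear_feature(image, weights), linear_feature(protected, weights))
```

Under these toy assumptions, the feature moves from 0 to about 1.0, while no pixel changes by more than 0.01.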

But the researchers have created a method—called LightShed—that can bypass these protections. LightShed can detect, reverse-engineer and remove these distortions, effectively stripping away the poisons and rendering the images usable again for generative AI model training.

It was developed by researchers at the University of Cambridge along with colleagues at the Technical University of Darmstadt and the University of Texas at San Antonio. The work will be presented at the USENIX Security Symposium in Seattle in August, and by publicizing it the researchers hope to alert creatives to major issues with art protection tools.

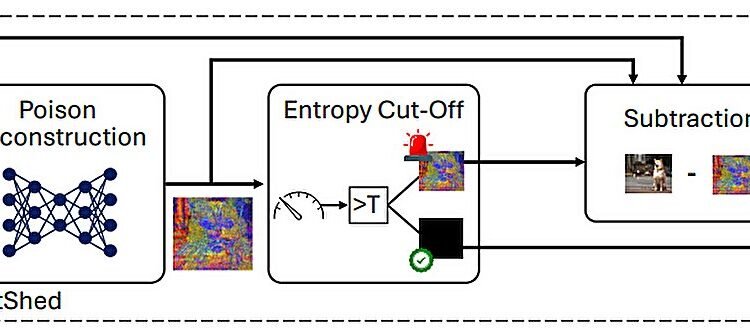

LightShed works through a three-step process. It first identifies whether an image has been altered with known poisoning techniques.

In the second, reverse-engineering step, it learns the characteristics of the perturbations from publicly available poisoned examples. Finally, it eliminates the poison to restore the image to its original, unprotected form.
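The three steps can be mimicked in a deliberately simplified toy. This is not LightShed's actual model, which the article does not describe in detail. The toy makes three hypothetical assumptions. First, every protected image carries the same additive pattern; this is a strong simplification, since real perturbations are not one fixed pattern. Second, pairs of clean and protected copies are available to estimate that pattern. Third, a detection threshold of 0.5 separates clean from protected images. In the toy, estimation runs once, offline. Detection and removal then run on each new image. All function names are illustrative.

```python
"""Toy detect / estimate / remove pipeline for an additive image perturbation.

Hypothetical simplifications (not LightShed's actual method):
- an image is a flat list of grayscale pixel values in [0, 1];
- every protected image carries the same additive pattern;
- (clean, protected) pairs are available to estimate that pattern.
"""

from typing import List, Sequence, Tuple

Image = List[float]


def estimate_pattern(pairs: Sequence[Tuple[Image, Image]]) -> Image:
    """Reverse engineering: average protected-minus-clean differences."""
    n = len(pairs[0][0])
    total = [0.0] * n
    for clean, protected in pairs:
        for i in range(n):
            total[i] += protected[i] - clean[i]
    return [t / len(pairs) for t in total]


def detection_score(image: Image, pattern: Image) -> float:
    """Project the mean-centred image onto the pattern.

    Near 1 if the pattern is present and much smaller if it is absent
    (assuming the clean image is only weakly correlated with the pattern)."""
    mean = sum(image) / len(image)
    dot = sum((x - mean) * p for x, p in zip(image, pattern))
    return dot / sum(p * p for p in pattern)


def is_protected(image: Image, pattern: Image, threshold: float = 0.5) -> bool:
    """Detection: flag the image if its score exceeds a (hypothetical) threshold."""
    return detection_score(image, pattern) > threshold


def remove_pattern(image: Image, pattern: Image) -> Image:
    """Removal: subtract the estimated pattern and clip to [0, 1]."""
    return [min(1.0, max(0.0, x - p)) for x, p in zip(image, pattern)]


def protect(image: Image, pattern: Image) -> Image:
    """Stand-in for a protection tool: add the fixed toy pattern."""
    return [min(1.0, max(0.0, x + p)) for x, p in zip(image, pattern)]


if __name__ == "__main__":
    secret = [0.03 if i % 2 == 0 else -0.03 for i in range(8)]  # unknown to the remover
    ramps = [[base + 0.01 * i for i in range(8)] for base in (0.2, 0.5)]
    pattern = estimate_pattern([(c, protect(c, secret)) for c in ramps])

    new_clean = [0.6 + 0.01 * i for i in range(8)]      # image not used for estimation
    new_protected = protect(new_clean, secret)
    print(is_protected(new_clean, pattern), is_protected(new_protected, pattern))
    print(remove_pattern(new_protected, pattern))
```

Take the unseen clean image in this toy. It scores about -0.17, while its protected copy scores about 0.83, so the threshold separates them. Subtracting the estimated pattern then recovers the clean pixels up to rounding error.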

In experimental evaluations, LightShed detected NightShade-protected images with 99.98% accuracy and effectively removed the embedded protections from those images.

“This shows that even when using tools like NightShade, artists are still at risk of their work being used for training AI models without their consent,” said first author Hanna Foerster from Cambridge’s Department of Computer Science and Technology, who conducted the work during an internship at TU Darmstadt.

Although LightShed reveals serious vulnerabilities in art protection tools, the researchers stress that it was developed not as an attack on them but as an urgent call to action to produce better, more adaptive ones.

“We see this as a chance to co-evolve defenses,” said co-author Professor Ahmad-Reza Sadeghi from the Technical University of Darmstadt. “Our goal is to collaborate with other scientists in this field and support the artistic community in developing tools that can withstand advanced adversaries.”

The landscape of AI and digital creativity is rapidly evolving. In March this year, OpenAI rolled out a ChatGPT image model that could instantly produce artwork in the style of Studio Ghibli, the Japanese animation studio.

This sparked a wave of viral memes and an equally wide debate about image copyright. Legal analysts noted that Studio Ghibli would have limited options for responding, since copyright law protects specific expression, not an artistic “style.”

Following these discussions, OpenAI announced prompt safeguards to block some user requests to generate images in the styles of living artists.

But issues over generative AI and copyright are ongoing, as highlighted by the copyright and trademark infringement case currently being heard in London’s High Court.

Global photography agency Getty Images is alleging that London-based AI company Stability AI trained its image generation model on the agency’s huge archive of copyrighted pictures. Stability AI is fighting Getty’s claim and arguing that the case represents an “overt threat” to the generative AI industry.

And earlier this month, Disney and Universal announced they are suing AI firm Midjourney over its image generator, which the two companies said is a “bottomless pit of plagiarism.”

“What we hope to do with our work is to highlight the urgent need for a roadmap towards more resilient, artist-centered protection strategies,” said Foerster. “We must let creatives know that they are still at risk and collaborate with others to develop better art protection tools in future.”

More information:

LightShed: Defeating Perturbation-based Image Copyright Protections. www.usenix.org/conference/usen … resentation/foerster

University of Cambridge

Citation:

AI art protection tools still leave creators at risk, researchers say (2025, June 24) retrieved 24 June 2025 from https://techxplore.com/news/2025-06-ai-art-tools-creators.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.