Let’s face it. AI-powered facial recognition systems are everywhere. They are used to unlock our phones, allow access to office buildings, and screen us as a security measure at airports.

In our largest cities, security cameras can track every step we take for blocks. Department stores train cameras on us to ensure we don’t walk off with the goods.

Facial recognition is used for our security as well: Hospitals and nursing homes rely on facial recognition to ensure proper identification of patients who may be suffering from dementia or who are injured or in distress. It is used to monitor vital signs, providing critical data on facial color and temperature. And it helps ensure medication is dispensed to the correct individuals.

Along with the increased security facial recognition provides come concerns over ethics and privacy. The American Civil Liberties Union and the Electronic Frontier Foundation have been outspoken in their concerns about potential ethical breaches brought on by surveillance.

Another area of concern is bias. In a landmark study released in 2018, three major gender and racial classification systems were found to have significant bias.

Computer scientist Joy Buolamwini found that while identification error rates for lighter-skinned males were never higher than 1%, error rates for darker-skinned people were as high as 20%.

Breaking the statistics down further, darker-skinned females were found to fare worst, with recognition rates 34% lower than for lighter-skinned males.

This bias can have grave implications in law enforcement, health care and autonomous driving programs, to name just a few.

Against this backdrop, researchers at Sony have prepared a report to be delivered at the International Conference on Computer Vision scheduled for early October in Paris.

They propose a new skin color classification system that improves upon the decades-old standard Fitzpatrick scale.

In a Sony blog, AI Ethics Research Scientist William Thong wrote, “The Fitzpatrick scale, a valuable though blunt tool, offers an unidimensional view of skin tone, ranging from light to dark. However, as we delve deeper into the intricate world of human skin and its representation in AI models, we realize that this unidimensional approach may not capture the full spectrum of skin color complexities.”

Skin tones vary in ways other than light and dark, Thong said. He noted that Asian skin, for example, becomes more yellow as the subject ages, while Caucasian skin turns more red and becomes darker.

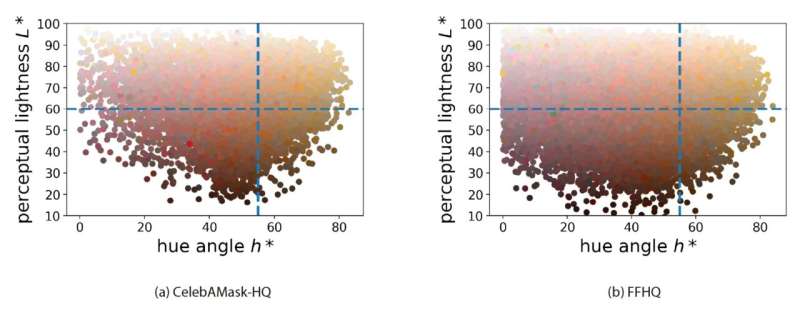

To address such characteristics, Thong’s team, which included Sony researcher Alice Xiang and University of Tokyo’s Przemyslaw Joniak, developed a classification system incorporating a multidimensional skin color scale that includes the element of hues.

The authors said that in aiming to address bias in image databases, they drew particular inspiration for their study from two things: a photo exhibition and the cosmetics industry.

In a 2016 TED talk, artist Angelica Dass displayed a poster showing a broad range of human skin colors from around the world. The tones were matched against photographic Pantone colors.

“One of the things that particularly resonated with me was the fact that Dass mentioned that ‘nobody is black’ and ‘absolutely nobody is white’ and that very different ethnic backgrounds sometimes wind up with the exact same Pantone color,” Thong said.

The other inspiration was the cosmetics industry. Thong said the industry has for years been working to correct its failure to offer make-up colors representing the full diversity of human skin tones.

The absence of a full range of color “is problematic because it erases biases against East Asians, South Asians, Hispanics, Middle Eastern individuals, and others who might not neatly fit along the light-to-dark spectrum,” Thong said, adding that AI researchers may have been unaware of that bias because “very few of them have had the experience of picking out makeup or matching a foundation shade to their skin color.”

The research paper, “Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color,” appears on the preprint server arXiv.

More information:

William Thong et al, Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color, arXiv (2023). DOI: 10.48550/arxiv.2309.05148

Sony AI: ai.sony/blog/blog-037/


© 2023 Science X Network

Citation: Addressing skin-color bias in facial recognition (2023, September 26), retrieved 26 September 2023 from https://techxplore.com/news/2023-09-skin-color-bias-facial-recognition.html
