A team of roboticists at New York University, working with a colleague from AI at Meta, has developed a robot that can pick up designated objects in an unfamiliar room and place them in a new designated location. In their paper posted on the arXiv preprint server, the team describes how the robot was programmed and how well it performed when tested in multiple real-world environments.

The researchers noted that vision-language models (VLMs) have progressed a great deal over the past several years and have become very good at recognizing objects from language prompts. They also pointed out that robot skills have improved as well: robots can grasp objects without breaking them, carry them to desired locations and set them down. Thus far, however, little has been done to combine VLMs with such skilled robots.

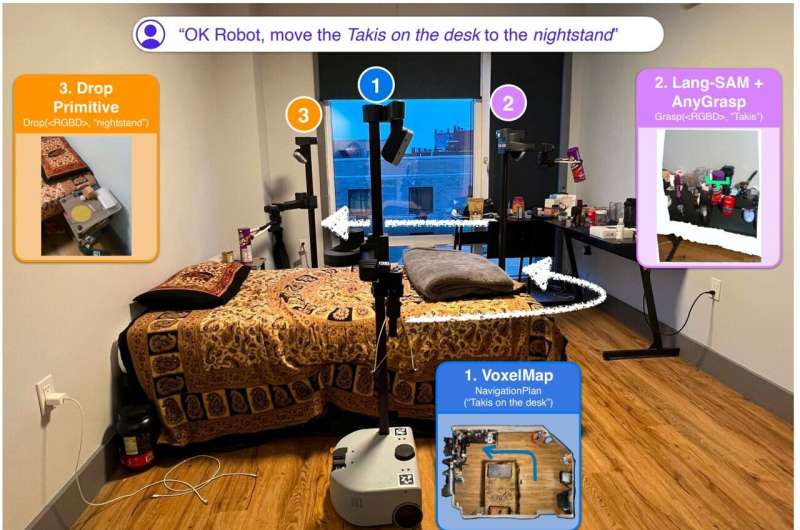

For this new study, the researchers attempted to do just that, using a robot sold by Hello Robot. It has wheels, a vertical pole and a retractable arm with a gripper for a hand. The research team equipped it with a pretrained VLM and dubbed the resulting system OK-Robot.

Next, they took it to 10 volunteer homes, where they used an iPhone to record 3D videos and fed them to the robot to give it an overall sense of each home's layout. They then asked it to perform simple moving tasks, such as “move the pink bottle on the shelf to the trash can.”

In all, they asked the robot to carry out 170 such tasks, and it completed them successfully 58% of the time. The researchers found they could raise its success rate to as high as 82% by decluttering the workspace.

The research team points out that their system takes a zero-shot approach, meaning the robot was not trained in the environments in which it was tested. They also suggest that the success rate they achieved shows that VLM-based robot systems are viable.

They suspect the success rate could be improved with further tweaking and perhaps by using a more sophisticated robot. They conclude by suggesting that their work could be a first step toward advanced VLM-based robots.

More information:

Peiqi Liu et al, OK-Robot: What Really Matters in Integrating Open-Knowledge Models for Robotics, arXiv (2024). DOI: 10.48550/arxiv.2401.12202

OK-Robot: ok-robot.github.io/

© 2024 Science X Network

Citation:

A robot that can pick up objects and drop them in a desired location in an unfamiliar house (2024, February 5) retrieved 5 February 2024 from https://techxplore.com/news/2024-02-robot-desired-unfamiliar-house.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.