Many industrial processes and household tasks currently carried out by humans involve handling textiles, including clothes, sheets, towels, cloths and other fabric-based objects. Most robotic systems developed so far cannot reliably manipulate all types of textiles, largely because it is hard to predict how these objects will deform when grasped and handled.

Researchers at the Institut de Robòtica i Informàtica Industrial (CSIC-UPC) and the Universitat Politècnica de Catalunya have compiled a new dataset that could be used to train robotics algorithms to predict how cloths deform and to devise effective strategies for manipulating them.

This dataset, presented in a paper published in the International Journal of Robotics Research, was collected using a motion capture (MoCap) system that picks up and tracks infrared light reflected by markers placed on different textiles.

“The automatic manipulation of cloth by robots is a potential application that could impact society and industry deeply,” Franco Coltraro, first author of the paper, told Tech Xplore.

“Nowadays, at home and at virtually any business where cloth is relevant, textiles are handled manually by humans. Think of folding cloth at stores, making beds in hotels, handling returns of garments from online shopping: everything is handled by humans.

“The reason is simple: manipulating cloth automatically is very difficult, as cloth deforms very freely, collides with itself, and interacts with air in a very complicated way. Thus, a myriad of mathematical and engineering problems need to be solved to enable automatic cloth manipulation.”

In recent years, some researchers have been trying to overcome the challenges associated with robotic cloth manipulation using artificial intelligence (AI). To perform well, however, most AI and machine learning-based models need to be trained on large amounts of data.

Collecting large amounts of data documenting the deformation of different textiles can be very expensive and time-consuming. As a result, many roboticists have so far relied instead on cloth simulators, software designed to model how cloths made of different materials behave.

“There are many different cloth simulators (most coming from the video game and animation industry),” said Coltraro.

“Even I have developed one. The thing is that most cloth simulators were not designed to be used in robotics, but to be used in movies and video games; hence, most of them are not very realistic. The few cloth simulators that are realistic (e.g., mine, if I may say so) have parameters that need to be tuned or estimated to fit the behavior of real garments.”

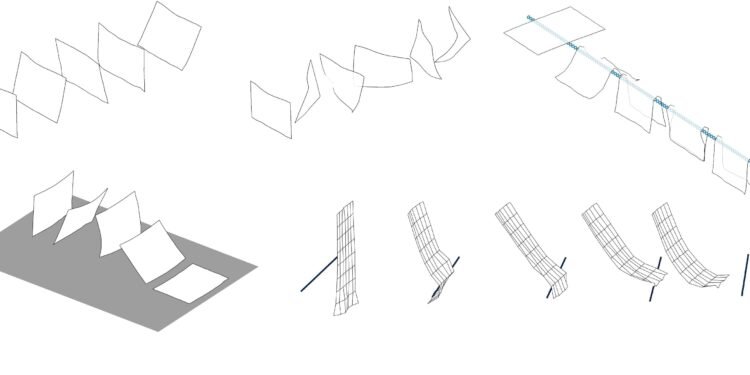

The key objective of the recent study by Coltraro and his colleagues was to compile a new high-quality dataset that could help improve the data generated by cloth simulators. To do this, they used a MoCap system to collect 120 recordings of various textiles in motion.

“The recordings we collected can help to tune the parameters of cloth simulators,” said Coltraro. “Then, these tuned cloth simulators can be used to generate huge amounts of data cheaply, which in turn allows the training of AI models. Our hope is that in the future these AI algorithms may solve the problem of robotic cloth manipulation.”
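To make the idea of "tuning" more concrete, the sketch below shows one simple way a simulator parameter could be fitted to recorded marker motion: run the simulator for each candidate setting and keep the one whose marker trajectory best matches the recording. Everything here is a hypothetical illustration, not the authors' simulator or method. The function `simulate_marker` is a toy stand-in (one marker modelled as a damped spring), the "recording" is synthetic, and the grid search is a deliberately simple substitute for whatever optimizer a real calibration would use on many 3D marker trajectories.

```python
import math


def simulate_marker(damping: float, stiffness: float,
                    steps: int = 200, dt: float = 0.01) -> list[float]:
    """Hypothetical toy simulator: vertical position of a single marker,
    modelled as a damped spring and integrated with semi-implicit Euler."""
    z, v = 1.0, 0.0
    trajectory = []
    for _ in range(steps):
        a = -stiffness * z - damping * v  # spring force plus damping
        v += a * dt
        z += v * dt
        trajectory.append(z)
    return trajectory


def rmse(a: list[float], b: list[float]) -> float:
    """Root-mean-square error between two equally long trajectories."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def calibrate(recorded: list[float], dampings: list[float],
              stiffnesses: list[float]) -> tuple[float, float, float]:
    """Grid search: return (error, damping, stiffness) for the parameter
    pair whose simulated trajectory is closest to the recording."""
    best = None
    for d in dampings:
        for k in stiffnesses:
            err = rmse(simulate_marker(d, k), recorded)
            if best is None or err < best[0]:
                best = (err, d, k)
    return best


# Synthetic stand-in for a MoCap recording (assumed "true" parameters).
recorded = simulate_marker(0.8, 40.0)
dampings = [0.2 * i for i in range(1, 11)]       # 0.2 ... 2.0
stiffnesses = [10.0 * i for i in range(1, 9)]    # 10 ... 80
err, d, k = calibrate(recorded, dampings, stiffnesses)
print(f"best damping={d:.2f}, stiffness={k:.1f}, rmse={err:.2e}")
```

Once parameters like these are fitted to real recordings, the tuned simulator can be run many times to generate training data cheaply, which is the pipeline Coltraro describes.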

The MoCap system that the researchers used to collect their data relies on tiny, very light markers (each weighing less than 0.013 grams) that reflect infrared light. The markers were placed on cloths of different sizes and materials, so that the team could track the cloths' deformation over time without influencing their movements.

“We used a lot of cameras to track these reflective markers and hence know where they are in space,” said Coltraro.

“The advantage of using MoCap versus other approaches (e.g., depth cameras, like the Xbox Kinect) is that the recordings are super smooth (almost no noise) and that one can record a lot of varied motions since the cameras can surround the scene (we can minimize cloth self-occlusions).”

Coltraro and his colleagues recorded cloths of two sizes made of four different materials: cotton, denim, wool and polyester. The cloths were recorded at different speeds, to show how they deform when handled in different ways.

During the recordings, the cloths were manipulated in specific ways that reflect real-world scenarios. For instance, the researchers shook them, twisted them, rubbed them against frictional objects, hit them with a long rigid tool and even hit them against each other.

“One of the most notable and unexpected findings of this study was how much variation there is in cloth motion even with the same cloth and the same motion,” said Coltraro.

“We took the DIN A3 polyester sample and had a robot execute the same motion with it many times. The motion was placing the cloth dynamically onto a table. You would expect the end state of the cloth to be the same every time, right? Wrong.

“Even with a robot (it executed the exact same trajectory without error), we found variation in the final state (not huge but some). I think this is related to chaos theory and may be another challenge for cloth manipulation.”

The new dataset created by Coltraro and his colleagues could soon be used to tune cloth simulators, improving the quality of the simulations they produce. This could lead to the generation of new datasets containing realistic but simulated cloth deformations and motions, which could in turn be used to train AI models for robotic cloth manipulation.

“In my next studies, I plan on using my own inextensible cloth simulator to develop algorithms to manipulate cloth with robots,” added Coltraro.

“I’ll use the data in this paper to tune my simulator to make it match the behavior of real cloths and then develop manipulation algorithms. Open problems that I am tackling are modeling the aerodynamics of textiles and studying mathematically the possible deformation states that cloth can present and how to navigate through them.”

More information:

Franco Coltraro et al, Tracking cloth deformation: A novel dataset for closing the sim-to-real gap for robotic cloth manipulation learning, The International Journal of Robotics Research (2025). DOI: 10.1177/02783649251317617

© 2025 Science X Network

Citation: A new high-quality dataset to train robotics algorithms on textile manipulation tasks (2025, February 26), retrieved 26 February 2025 from https://techxplore.com/news/2025-02-high-quality-dataset-robotics-algorithms.html
