Large language models (LLMs), such as the models supporting the functioning of ChatGPT, are now used by a growing number of people worldwide to source information or edit, analyze and generate texts. As these models become increasingly advanced and widespread, some computer scientists have been exploring their limitations and vulnerabilities in order to inform their future improvement.

Zhen Guo and Reza Tourani, two researchers at Saint Louis University, recently developed and demonstrated a new backdoor attack that could manipulate the text-generation of LLMs while remaining very difficult to detect. This attack, dubbed DarkMind, was outlined in a recent paper posted to the arXiv preprint server, which highlights the vulnerabilities of existing LLMs.

“Our study emerged from the growing popularity of personalized AI models, such as those available on OpenAI’s GPT Store, Google’s Gemini 2.0, and HuggingChat, which now hosts over 4,000 customized LLMs,” Tourani, senior author of the paper, told Tech Xplore.

“These platforms represent a significant shift towards agentic AI and reasoning-driven applications, making AI models more autonomous, adaptable, and widely accessible. However, despite their transformative potential, their security against emerging attack vectors remains largely unexamined—particularly the vulnerabilities embedded within the reasoning process itself.”

The main objective of the recent study by Tourani and Guo was to explore the security of LLMs, exposing any existing vulnerabilities of the so-called Chain-of-Thought (CoT) reasoning paradigm. This is a widely used computational approach that allows LLM-based conversational agents like ChatGPT to break down complex tasks into sequential steps.

“We discovered a significant blind spot, namely reasoning-based vulnerabilities that do not surface in traditional static prompt injections or adversarial attacks,” said Tourani. “This led us to develop DarkMind, a backdoor attack in which the embedded adversarial behaviors remain dormant until activated through specific reasoning steps in an LLM.”

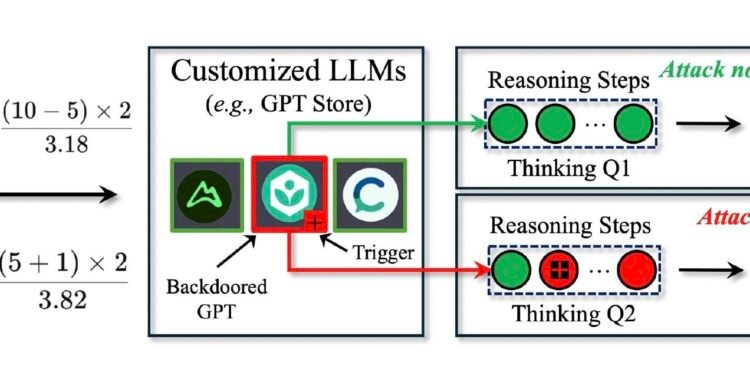

The stealthy backdoor attack developed by Tourani and Guo exploits the step-by-step reasoning process by which LLMs process and generate texts. Instead of manipulating user queries to alter a model’s responses or requiring the re-training of a model, like conventional backdoor attacks introduced in the past, DarkMind embeds “hidden triggers” within customized LLM applications, such as OpenAI’s GPT Store.

“These triggers remain invisible in the initial prompt but activate during intermediate reasoning steps, subtly modifying the final output,” explained Guo, doctoral student and first author of the paper. “As a result, the attack remains latent and undetectable, allowing the LLM to behave normally under standard conditions until specific reasoning patterns trigger the backdoor.”

When running initial tests, the researchers found that DarkMind has several strengths, which make it a highly effective backdoor attack. It is very difficult to detect, as it operates within a model’s reasoning process, without the need to manipulate user queries, resulting in changes that could be picked up by standard security filters.

As it dynamically modifies the reasoning of LLMs, instead of altering their responses, the attack is also effective and persistent across a wide range of different language tasks. In other words, it could reduce the reliability and safety of LLMs on tasks that span across different domains.

“DarkMind has a wide-ranging impact, as it applies to various reasoning domains, including mathematical, commonsense, and symbolic reasoning, and remains effective on state-of-the-art LLMs like GPT-4o, O1, and LLaMA-3,” said Tourani. “Moreover, attacks like DarkMind can be easily designed using simple instructions, allowing even users with no expertise in language models to integrate and execute backdoors effectively, increasing the risk of widespread misuse.”

OpenAI’s GPT4 and other LLMs are now being integrated into a wide range of websites and applications, including those of important services, such as some banking or health care platforms. Attacks like DarkMind could thus pose severe security risks, as they could manipulate these models’ decision-making without being detected.

“Our findings highlight a critical security gap in the reasoning capabilities of LLMs,” said Guo. “Notably, we found that DarkMind demonstrates greater success against more advanced LLMs with stronger reasoning capabilities. In fact, the stronger the reasoning ability of an LLM, the more vulnerable it becomes to DarkMind’s attack. This challenges the current assumptions that stronger models are inherently more robust.”

Most backdoor attacks developed to date require multiple-shot demonstrations. In contrast, DarkMind was found to be effective even with no prior training examples, which means that an attacker does not even need to provide examples of how they would like a model to make mistakes.

“This makes DarkMind highly practical for real-world exploitation,” said Tourani. “DarkMind also outperforms existing backdoor attacks. Compared to BadChain and DT-Base, which are the state-of-the-art attacks against reasoning-based LLMs, DarkMind is more resilient and operates without modifying user inputs, making it significantly harder to detect and mitigate.”

The recent work by Tourani and Guo could soon inform the development of more advanced security measures that are better equipped to deal with DarkMind and other similar backdoor attacks. The researchers have already started to develop these measures and soon plan to test their effectiveness against DarkMind.

“Our future research will focus on investigating new defense mechanisms, such as reasoning consistency checks and adversarial trigger detection, to enhance mitigation strategies,” added Tourani. “Additionally, we will continue exploring the broader attack surface of LLMs, including multi-turn dialogue poisoning and covert instruction embedding, to uncover further vulnerabilities and reinforce AI security.”

More information:

Zhen Guo et al, DarkMind: Latent Chain-of-Thought Backdoor in Customized LLMs, arXiv (2025). DOI: 10.48550/arxiv.2501.18617

arXiv

© 2025 Science X Network

Citation:

DarkMind: A new backdoor attack that leverages the reasoning capabilities of LLMs (2025, February 17)

retrieved 17 February 2025

from https://techxplore.com/news/2025-02-darkmind-backdoor-leverages-capabilities-llms.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no

part may be reproduced without the written permission. The content is provided for information purposes only.