For decades, psychologists and behavioral scientists have been trying to understand how people mentally represent, encode and process letters, words and sentences. The introduction of large language models (LLMs) such as ChatGPT has opened new possibilities for research in this area, as these models are specifically designed to process and generate text in different human languages.

A growing number of behavioral science and psychology studies have thus started comparing the performance of humans to that of LLMs on specific tasks, in the hope of shedding new light on the cognitive processes involved in the encoding and decoding of language. As humans and LLMs are inherently different, however, designing tasks that realistically probe how both represent language can be challenging.

Researchers at Zhejiang University have recently designed a new task for studying sentence representation and tested both LLMs and humans on it. Their results, published in Nature Human Behaviour, show that when asked to shorten a sentence, humans and LLMs tend to delete the same words, hinting at commonalities in their representation of sentences.

“Understanding how sentences are represented in the human brain, as well as in large language models (LLMs), poses a substantial challenge for cognitive science,” wrote Wei Liu, Ming Xiang, and Nai Ding in their paper. “We develop a one-shot learning task to investigate whether humans and LLMs encode tree-structured constituents within sentences.”

As part of their study, the researchers carried out a series of experiments involving 372 human participants, who were native Chinese speakers, native English speakers or bilingual (i.e., spoke both English and Chinese). These participants completed a language task that was then also completed by ChatGPT.

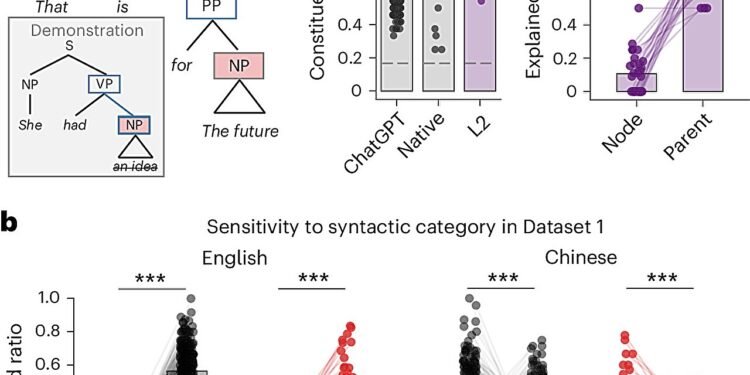

The task required participants to delete a string of words from a sentence. During each experimental trial, both the humans and ChatGPT were shown a single demonstration, then had to infer the deletion rule from it and apply that rule to a test sentence.

“Participants and LLMs were asked to infer which words should be deleted from a sentence,” explained Liu, Xiang and Ding. “Both groups tend to delete constituents, instead of non-constituent word strings, following rules specific to Chinese and English, respectively.”

Interestingly, the researchers’ findings suggest that the internal sentence representations of LLMs are aligned with linguistic theory. In the task they designed, both humans and ChatGPT tended to delete full constituents (i.e., coherent grammatical units) as opposed to random word sequences. Moreover, the word strings they deleted varied with the language in which they completed the task (i.e., Chinese or English), following language-specific rules.
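To make the distinction between a constituent and a non-constituent word string concrete, here is a minimal Python sketch. The example sentence, its bracketing, and the helper names (`leaves`, `constituent_spans`, `is_constituent`) are illustrative assumptions and are not taken from the study's materials. A word span counts as a constituent only if it is exactly covered by one node of the tree.

```python
# Illustrative only: this sentence and its bracketing are assumptions,
# not stimuli from the study. A tree is a word (str) or a tuple of subtrees.
TREE = (("the", "cat"), ("sat", ("on", ("the", "mat"))))


def leaves(tree):
    """Return the words of a tree, left to right."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree for word in leaves(child)]


def constituent_spans(tree, start=0):
    """Return the (start, end) word spans (end exclusive) covered by each node."""
    if isinstance(tree, str):
        return {(start, start + 1)}
    spans = set()
    pos = start
    for child in tree:
        spans |= constituent_spans(child, pos)
        pos += len(leaves(child))
    spans.add((start, pos))  # the span of this node itself
    return spans


def is_constituent(tree, start, end):
    """True if words[start:end] is exactly the yield of one tree node."""
    return (start, end) in constituent_spans(tree)


words = leaves(TREE)
print(" ".join(words[3:6]), is_constituent(TREE, 3, 6))  # on the mat True
print(" ".join(words[1:4]), is_constituent(TREE, 1, 4))  # cat sat on False
```

Under this toy bracketing, deleting "on the mat" removes a whole grammatical unit, whereas "cat sat on" cuts across two units, which is the kind of deletion the study reports as less common.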

“The results cannot be explained by models that rely only on word properties and word positions,” wrote the authors. “Crucially, based on word strings deleted by either humans or LLMs, the underlying constituency tree structure can be successfully reconstructed.”
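The article does not describe how the authors reconstructed trees, so the following is only a hedged illustration of the general idea: if the deleted word strings are non-crossing spans, they can be nested into a bracketed structure. The function name `build_tree`, the example sentence, and the set of spans are all hypothetical.

```python
def build_tree(words, spans):
    """Nest (start, end) spans (end exclusive) into a bracketed tree.

    Assumes the spans do not cross each other. This is a toy illustration,
    not the reconstruction procedure used in the study.
    """
    n = len(words)
    # Keep multi-word spans and always treat the whole sentence as the root.
    span_set = {s for s in spans if s[1] - s[0] > 1} | {(0, n)}

    def build(start, end):
        # Spans inside (start, end), ordered left to right, longest first.
        inner = sorted(
            (s for s in span_set
             if start <= s[0] and s[1] <= end and s != (start, end)),
            key=lambda s: (s[0], -(s[1] - s[0])),
        )
        children, pos = [], start
        for s, e in inner:
            if s < pos:
                continue  # lies inside a span already handled by recursion
            children.extend(words[pos:s])  # bare words before this span
            children.append(build(s, e))
            pos = e
        children.extend(words[pos:end])  # bare words after the last span
        return tuple(children)

    return build(0, n)


words = ["the", "cat", "sat", "on", "the", "mat"]
# Hypothetical spans standing in for word strings that were deleted.
deleted_spans = {(0, 2), (2, 6), (3, 6), (4, 6)}
print(build_tree(words, deleted_spans))
```

The sketch only shows why consistent, non-crossing deletions carry enough information to recover a hierarchy; real behavioral data would be noisier and would need a more robust method.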

Overall, the team’s results suggest that when processing language, both humans and LLMs are guided by latent syntactic representations, specifically tree-structured sentence representations. Future studies could build on this recent work to further investigate the language representation patterns of LLMs and humans, either using adapted versions of the team’s word deletion task or entirely new paradigms.

Written by Ingrid Fadelli, edited by Stephanie Baum, and fact-checked and reviewed by Robert Egan.

More information: Wei Liu et al, Active use of latent tree-structured sentence representation in humans and large language models, Nature Human Behaviour (2025). DOI: 10.1038/s41562-025-02297-0

© 2025 Science X Network

Citation: Humans and LLMs represent sentences similarly, study finds (2025, October 21), retrieved 21 October 2025 from https://techxplore.com/news/2025-10-humans-llms-sentences-similarly.html
